In a recent blog post, Anthropic warned of the 'major threat' posed by chip smuggling. It claims China has "established sophisticated smuggling operations, with documented cases involving hundreds of millions of dollars worth of chips". This leads Anthropic to argue that export enforcement needs more funding, and that the tier system introduced in the 'AI Diffusion Rule' needs adjusting, with more lenient rules giving tier 2 countries better access to the technology.

Effectively, the AI Diffusion Rule, which takes effect on May 15, would give America's allies priority access to advanced AI chips, and any chips smuggled into China would undermine the aims of that rule.

For some context on those specific smuggling methods, a woman was caught smuggling 200 CPUs in a prosthetic belly back in 2022. Then, in 2023, two men were found smuggling GPUs into China alongside live lobsters. These aren't just random claims from Anthropic; it's citing previous cases of smuggling.

Nvidia, however, told CNBC: "American firms should focus on innovation and rise to the challenge, rather than tell tall tales that large, heavy, and sensitive electronics are somehow smuggled in ‘baby bumps’ or ‘alongside live lobsters’."

Given the antagonism between America and China over AI advancement (as shown by the likes of DeepSeek), Anthropic, as an American AI company, has a vested interest in America being the dominant leader in AI growth. As cited at the bottom of its blog post, Anthropic's leaders have previously argued that America's "shared security, prosperity and freedoms hang in the balance" when it comes to wider AI support and adoption.

On the way to a recent White House event, Jen-Hsun Huang spoke about the need to "accelerate the diffusion of American AI technology around the world." That's Nvidia's CEO arguing for getting more 'American' AI chips out into different territories, which could kinda put him on the same side of the argument as Anthropic. Especially as he later stated that "China is right behind us," which also seems to lean into that whole secure-nationhood stuff.

This is one step in a larger argument companies are making about their competition with China. Just a few months ago, OpenAI argued it should be allowed to scrape copyrighted content, as it would otherwise lose out to China.

Anthropic, like many other major AI companies, relies on Nvidia hardware for its wider AI workloads. The examples it brings up are of chip smuggling from the last few years, but it doesn't argue that these specific methods are how smugglers are evading detection right now. In this sense, Anthropic is broadly gesturing at a perceived smuggling problem to justify tighter restrictions and enforcement in light of the Diffusion Rule.

Nvidia is hand-waving that concern with its response, but the incredulity in the messaging does seem a tad strange, given these were previously successful smuggling techniques, albeit not specifically of Nvidia products. Anthropic has not given any evidence of any relevant or ongoing smuggling techniques as of the time of writing.

Best CPU for gaming: Top chips from Intel and AMD.

Best gaming motherboard: The right boards.

Best graphics card: Your perfect pixel-pusher awaits.

Best SSD for gaming: Get into the game first.

Not that this is a particularly big surprise, given the RX 7900 GRE was also a China-exclusive card to start with, although it did become available in limited quantities to the rest of the world eventually.

Anyway, the RX 9070 GRE has 48 Compute Units, a boost frequency of up to 2,790 MHz, 48 Ray Tracing Accelerators, 96 AI Accelerators, and 96 ROPs, with 3,072 Stream Processors in total (via Videocardz). Oh, and 12 GB of GDDR6 VRAM over a 192-bit bus, which is spookily close (by which I mean, bang on accurate) to the leaked specs we reported on last week.
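Those numbers hang together neatly. Assuming RDNA 4 puts 64 stream processors, one Ray Tracing Accelerator and two AI Accelerators in each Compute Unit (per-CU ratios taken as an assumption here, not quoted from AMD's spec sheet), the rest of the list falls straight out of the 48 CU count. A quick Python sketch of that arithmetic:

```python
# Derive RX 9070 GRE unit counts from its Compute Unit (CU) count.
# Assumption: RDNA 4 packs 64 stream processors, 1 ray accelerator
# and 2 AI accelerators into each CU.
SP_PER_CU = 64
RA_PER_CU = 1
AI_PER_CU = 2


def rdna4_units(compute_units: int) -> dict:
    """Return derived shader, ray tracing and AI unit counts for a CU count."""
    return {
        "stream_processors": compute_units * SP_PER_CU,
        "ray_accelerators": compute_units * RA_PER_CU,
        "ai_accelerators": compute_units * AI_PER_CU,
    }


gre = rdna4_units(48)
print(gre)  # {'stream_processors': 3072, 'ray_accelerators': 48, 'ai_accelerators': 96}
```

Plug in 48 and you get exactly the 3,072 Stream Processors, 48 Ray Tracing Accelerators and 96 AI Accelerators listed above.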

AMD also says that the RX 9070 GRE is 6% faster than the RX 7900 GRE on average across 30+ games at native 1440p Ultra, which is a bit underwhelming in my book. Not that I'd consider that performance to be slow, exactly, but I can't help but feel it's a roundabout way of saying "slower than the RX 9070 and RX 9070 XT." Which is to be expected, of course, but still.

The pre-order price is listed at 4,199 RMB. That equates to around $588 at today's exchange rates, although it's difficult to do a direct comparison with a Chinese pre-order price versus current Western market GPU prices, given that most models are still yo-yoing around in pricing and availability.

One thing's for sure, though: It's currently slotting in as the least-powerful card in AMD's latest generation lineup—although, should it become available in western markets for a sensible price tag, it might stand a chance of giving the RTX 5070 something to think about.

Still, we're getting towards the sort of time we expect to see an RX 9060 XT release, which will hopefully flesh out the RX 9000-series desktop range good and proper. Computex 2025, perhaps? It's not long now until I pack my bags for Taiwan once more, so I'm hoping AMD might have something a little more exciting (or at least, available) to show off there.

Once again, it's X user Haze2K1 and the shipping data website NBD that are furnishing us with an insight into Intel's graphics machinations. But this time, instead of the G31 GPU itself popping up in the data, it's supporting components for the chip, including socket stiffeners (ooo, er!) and cylindrical multicontact connectors, whatever they are.

The shipments were to Intel's Vietnam facility, where Haze2K1 claims the company's reference-design Limited Edition boards are produced. You could take from that, should you wish to be optimistic, something along the lines of Intel making preparations to start production on a line of G31-based gaming graphics cards.

But hang on, even if that's true, exactly what can you expect from G31? As I explained last time around, Intel's latest Arc B580 and B570 GPUs use the BMG-G21 chip and the bigger G31 GPU has long been rumoured as the next step up in the Battlemage hierarchy.

There's no official information, but the rumour mill has settled on 32 Xe cores for G31, fully 60% more than the 20 Xe cores of the existing Intel Arc B580. Going by the raw performance of the B580, you might then expect performance up at Nvidia RTX 5070 levels or even beyond.
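For a sense of where that RTX 5070 guess comes from, here's the naive sum in Python. It assumes performance scales linearly with core count, which is a big assumption: clocks, memory bandwidth and drivers (CPU overhead included) all get a say, so treat the result as an optimistic rule of thumb rather than a forecast. The 32-core figure is the rumour, not anything Intel has confirmed.

```python
# Naive core-count scaling for the rumoured G31 GPU.
# Assumption: performance grows linearly with core count, which real GPUs
# rarely achieve because clocks, bandwidth and drivers also matter.
B580_CORES = 20  # Arc B580, per the text
G31_CORES = 32   # rumoured figure, not confirmed by Intel


def core_uplift(new_cores: int, old_cores: int) -> float:
    """Fractional increase in core count, e.g. 0.6 for 60% more."""
    return new_cores / old_cores - 1


uplift = core_uplift(G31_CORES, B580_CORES)
print(f"G31 has {uplift:.0%} more cores than the B580")
```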

In practice, that seems pretty ambitious. But if G31 can at least get near the 5070, well, that would be pretty exciting, especially if Intel retained its typically aggressive GPU pricing strategy. Hence, my speculation a few weeks ago that G31 could have the makings of a $400 RTX 5070 killer.

Of course, you have to wonder why, if Intel is indeed planning to release a G31-based graphics card, it's taking so long, what with the B580 launching last December. There's been some talk that G31 suffers even more acutely from the excessive CPU load that has compromised the appeal of the Arc B580 board. But ultimately, we just don't know.

That said, if Intel could get a G31-based graphics card out in 2025, it should have over a year to win customers. We wouldn't expect properly new mid-range GPUs from Nvidia until spring 2027. Likewise, AMD's RDNA 4-based GPUs, including the RX 9070 and RX 9070 XT, are pretty new on the market, with the RX 9060 series not even on sale yet.

The bottom line is that there's still time for Intel to make an impact. So, get those fingers and toes crossed and keep watching this space.

Nope. Certainly far from in the clear.

If you can wait to buy a graphics card, my advice is that you absolutely should. It's the wild, wild west out there right now and, if you're not desperate, it's better to wait it out.

Can't wait any longer? Maybe your current card's given up the ghost or you fear you don't have the graphical grunt to run Elden Ring Nightreign at a decent lick. Fair enough, but just know that it won't be easy. If refreshing Amazon every five seconds or camping outside your local retailer to try and score a cheap GPU deal is not your thing, I've got you.

I've rounded up the five GPUs I'd buy right now if I had to buy one, so you can waste your money efficiently and overpay just a bit instead of a lot. Don't say I didn't warn you.

Quick links

- Nvidia RTX 4060 | $380 at Newegg | £249 at Amazon UK

- Nvidia RTX 5070 Ti | $900 at Newegg | £808 at Amazon UK

- AMD RX 7800 XT | $580 at Newegg | £461 on Amazon UK

- Nvidia RTX 5060 Ti | $530 at Amazon | £450 at Amazon UK

- Nvidia RTX 5070 | $604 at Newegg | £519 at Amazon UK

Nvidia RTX 4060

MSI RTX 4060 Ventus 2X Black OC | 8 GB GDDR6 | 128-bit | 3072 CUDA cores | 2.46 GHz boost clock | 115W | $380 at Newegg | £249 at Amazon UK

I know that last-gen cards aren't particularly exciting anymore, not when we're all drooling over the likes of the RTX 5090 (which, by the way, apparently can't run Oblivion Remastered at a steady 60 fps. Go figure). But I didn't promise you excitement; I promised you GPUs at prices you'd be able to stomach, and that's why the RTX 4060 is at the top of the list.

With upscaling and DLSS 3, you'll still run most games at high settings at 1080p. Can the RTX 4060 handle 1440p? Yeah, it can, but you'll need to be conservative with the graphics settings.

In our RTX 4060 review, you'll find that the card can comfortably maintain 60 fps in most games even if you drive the settings all the way up to max. Cyberpunk 2077 on ultra with ray tracing on is not a thing for this card, though, at least not without DLSS 3 and Frame Generation.

Going last-gen has one glaring downside: No DLSS 4. You can't quadruple your frame rates with Nvidia's Multi Frame Generation. But, seeing as you can still double them, I say you'll be fine for a few years.

Price-wise, I'm sorry to report that the RTX 4060 is, like every other card, selling above MSRP. Like I said, the GPU market is awful right now. But I found one for sale for $380 at Newegg (or £249 for us lucky Brits), and that's as good as it gets right now.

Nvidia RTX 5070 Ti

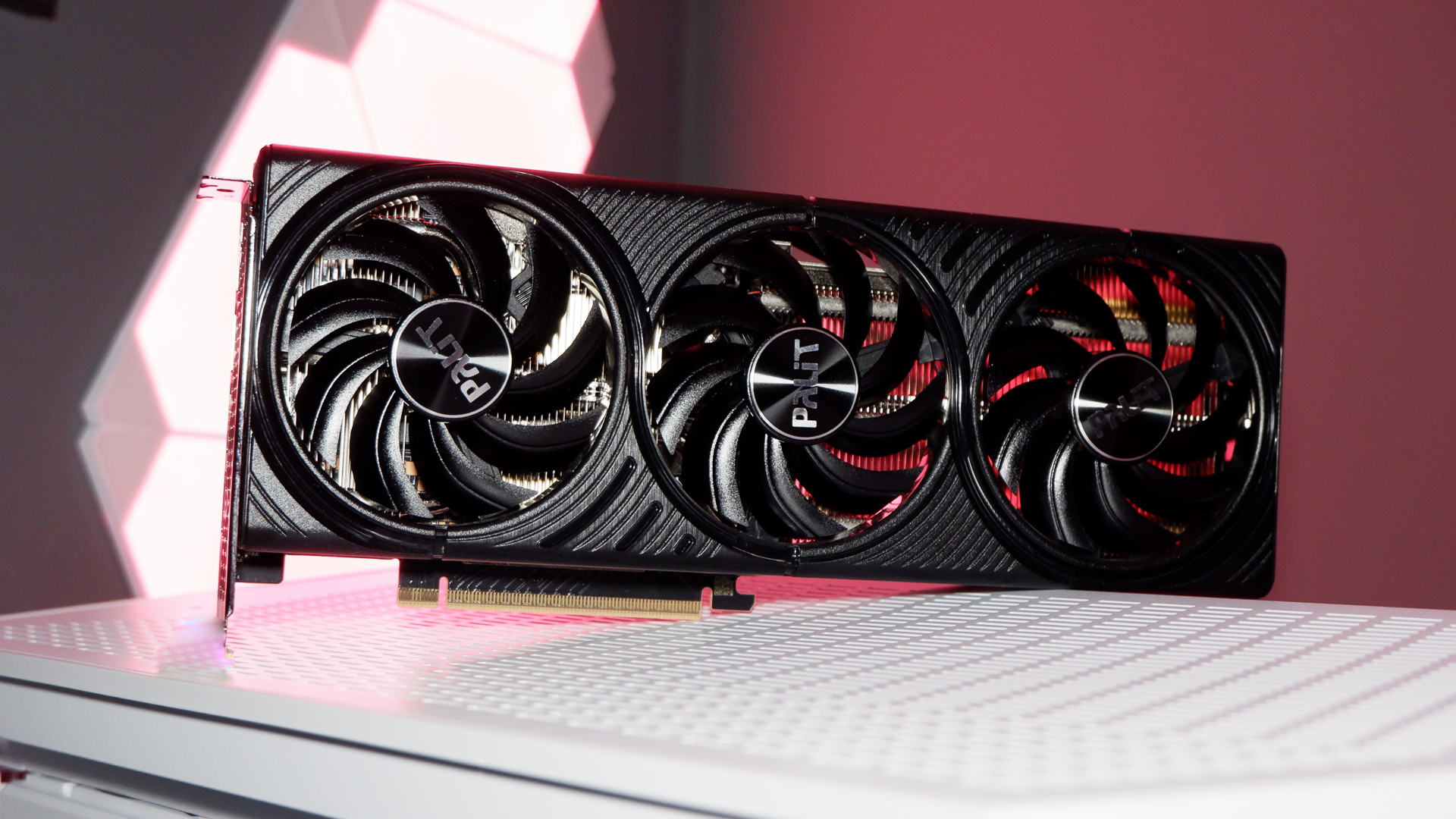

MSI Ventus 3X | 16 GB GDDR7 | 256-bit | 8960 CUDA cores | 2.45 GHz boost clock | 300W | $900 at Newegg | £808 at Amazon UK (Palit card)

For gamers with deeper pockets that don't quite go all the way to the RTX 5090, the RTX 5070 Ti is the only option right now. With the RTX 5080 selling for $1,280 and up on Amazon, you don't have much of a choice.

Back to the RTX 5070 Ti. Polarizing graphics card, that one.

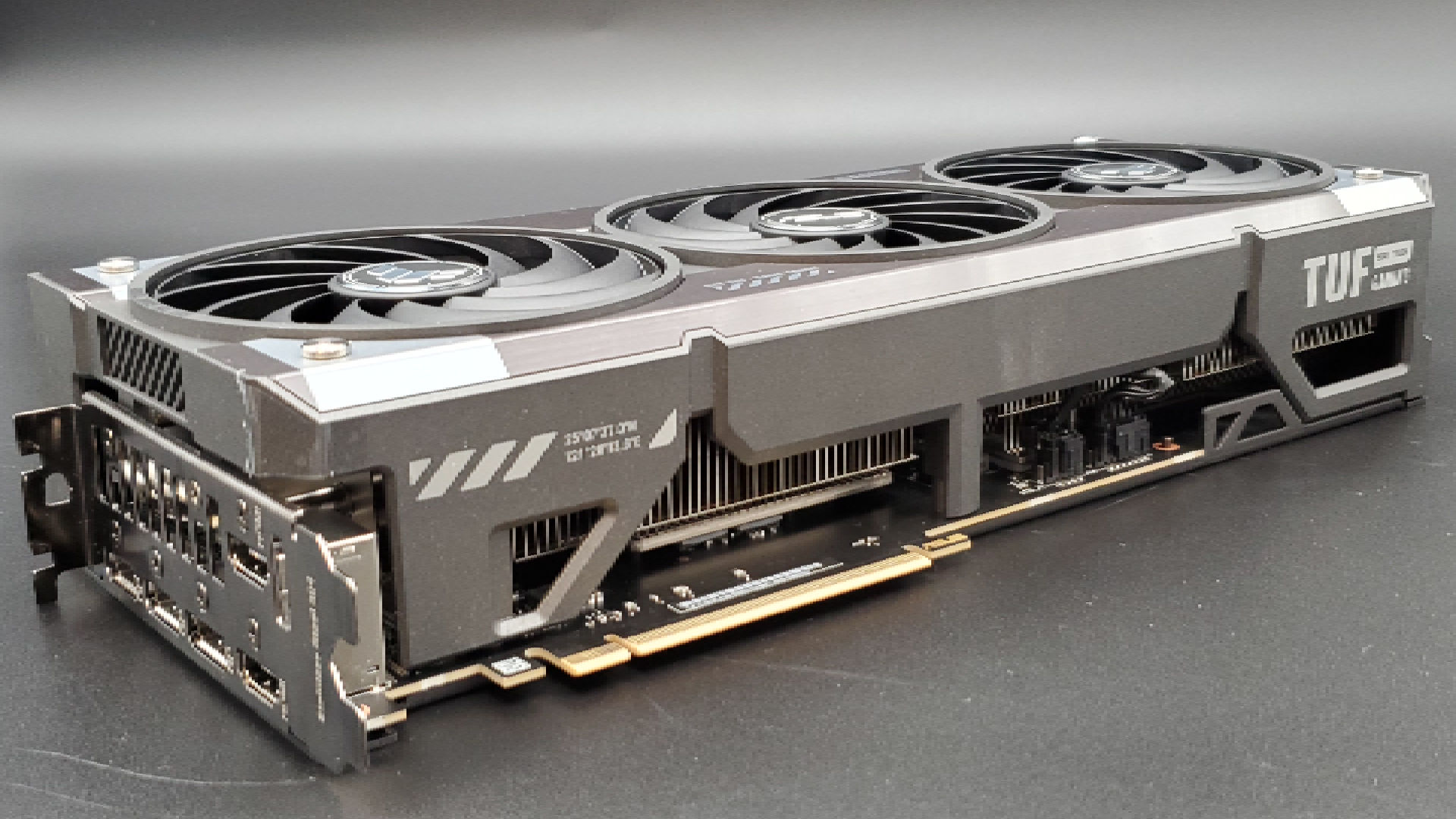

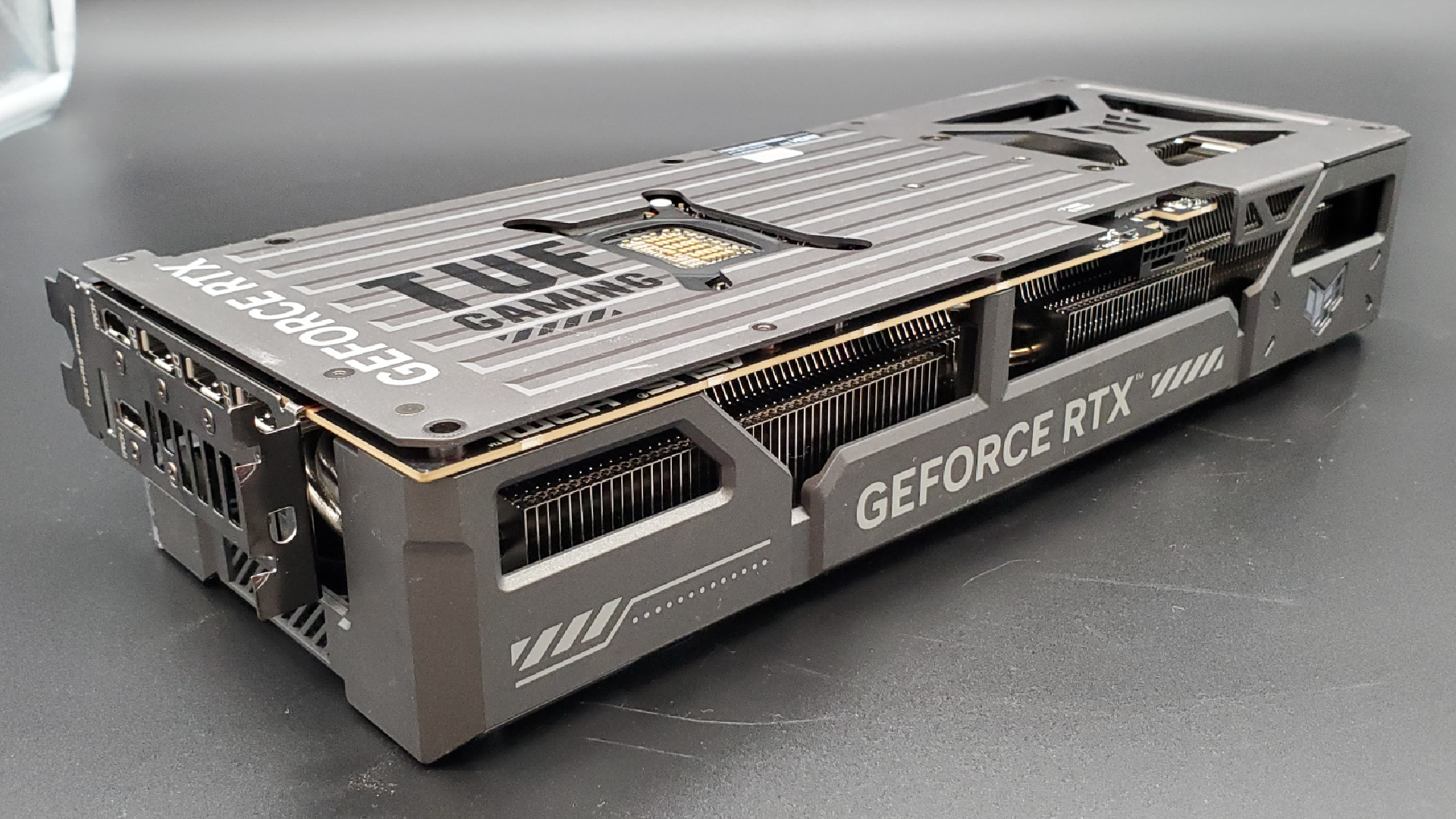

PC Gamer has reviewed two different models of it, the Asus TUF Gaming RTX 5070 Ti and this MSI Ventus 3X RTX 5070 Ti, and while one scored a hefty 86 out of 100, the other fared much worse with a 68. It's not that one was great and the other wasn't; it's all about the price.

If we just close our eyes and pretend for a second the pricing issue isn't a thing (mmm, what a world...), the RTX 5070 Ti is a decent GPU. It can almost match the RTX 5080 with the right overclock.

The RTX 5070 Ti is roughly 20% faster than the RTX 4070 Ti Super, and around 15 to 20% slower than the RTX 5080. In our 1440p benchmark, it scored between 61 fps (Cyberpunk 2077 with ray tracing on ultra settings) and well above 100 fps in games that don't make a mockery out of every GPU. It's brilliant at 1440p, good enough at 4K, and overkill at 1080p.

The RTX 5070 Ti is also overpriced, no surprise there. The cheapest one I spotted was $900 at Newegg, and it also gets you Doom: The Dark Ages for free, which normally costs $100. If you're over on this side of the pond, Amazon UK sells the RTX 5070 Ti for £807.

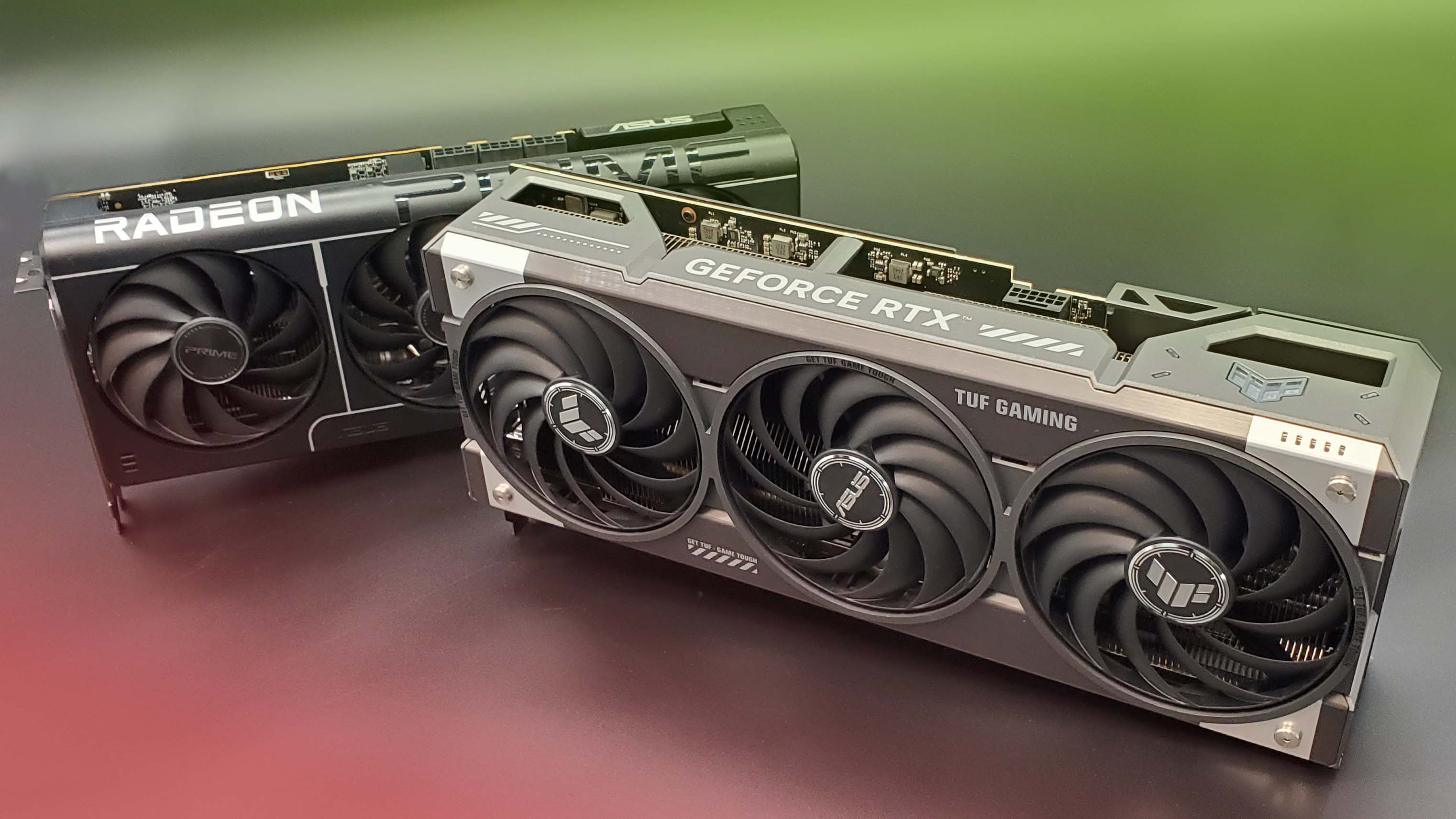

AMD RX 7800 XT

Sapphire Pulse RX 7800 XT | 16 GB GDDR6 | 256-bit | 60 compute units | 2.43 GHz boost clock | 263W | $580 at Newegg | £461 on Amazon UK (XFX card)

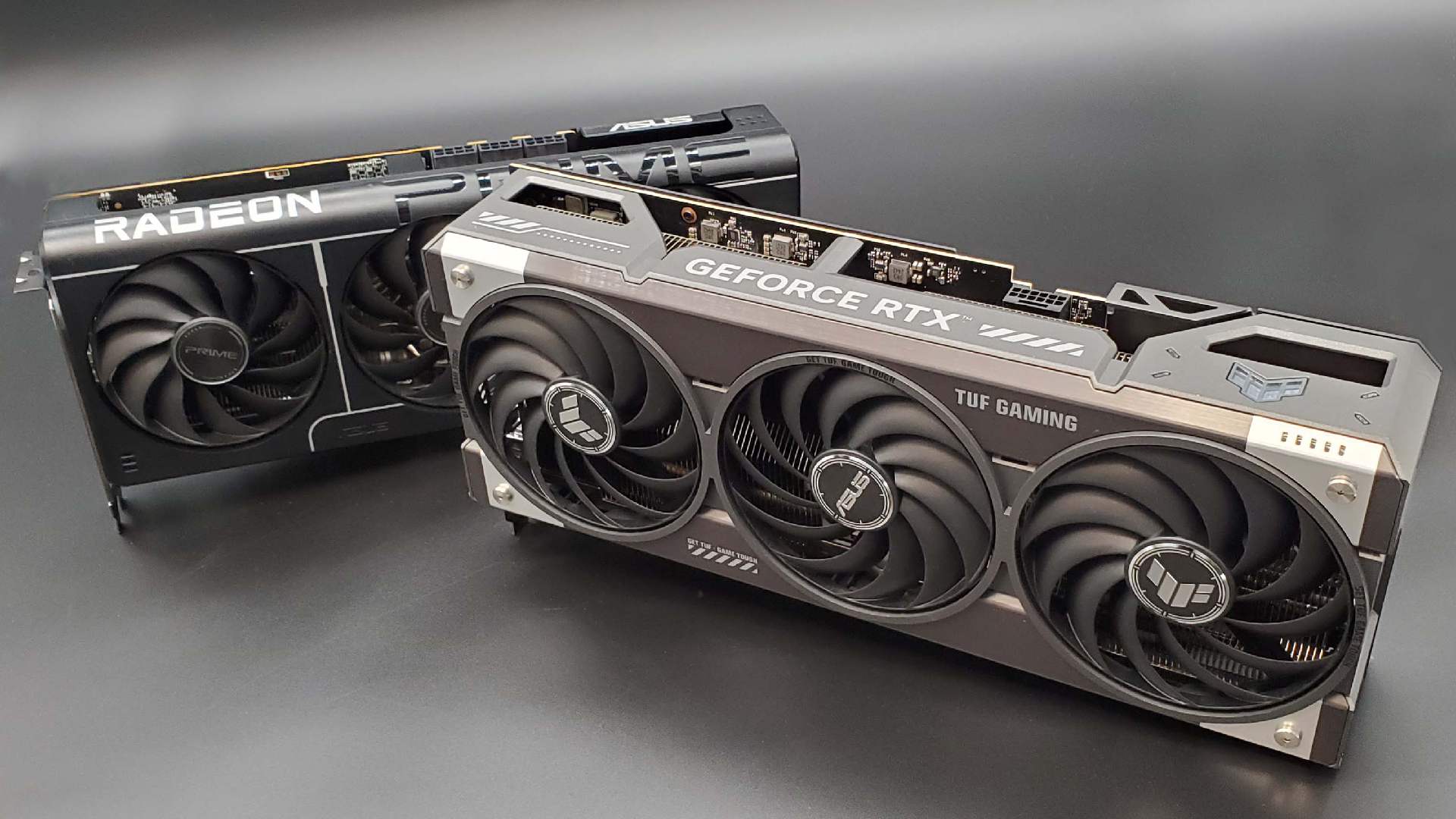

Listen, I'm a sucker for AMD, and I love the RX 9070 XT. I just don't think you should buy one right now, so I'm still recommending the RX 7800 XT instead.

The RX 9070 XT is one frustratingly pricey affordable GPU these days. Its big selling point was that it was massively undercutting the Nvidia competition and delivering on gaming performance. Scarcity and opportunism, however, have bumped the $599 MSRP to the moon. The absolute cheapest option on Newegg is $859, and just typing that is upsetting. At that price point, just get the RTX 5070 Ti, which wins in ray tracing and offers MFG.

The RX 7800 XT is an acceptable alternative if you're willing to forgo (some of) that fancy-schmancy ray tracing stuff. And if not, I've got two more Nvidia options for you below, so what are you still doing here?

The RX 7800 XT didn't break any records when it first launched, but it offered top-notch value for the money, and even now, when it costs $580 at Newegg and $590 on Amazon (or £461 on Amazon UK), that side of it is still true. When we look at pure rasterization, this is a GPU that rivals the RTX 3080 and the RTX 4070, and you'll generally find it for less than either.

A 1440p card through and through, the RX 7800 XT delivers some of its best work at that resolution, although it certainly wouldn't mind if you scaled down to 1080p. At 1080p, it easily hits 60 fps in every game bar Cyberpunk 2077; at 1440p, it gets absolutely obliterated by the dystopian blockbuster, huffing and puffing as it averages 27 fps with ray tracing on. Outside of Cyberpunk, it's an admirable GPU, and it hits anywhere from 60-ish to 120-ish fps in every other game in our test suite.

Nvidia RTX 5060 Ti

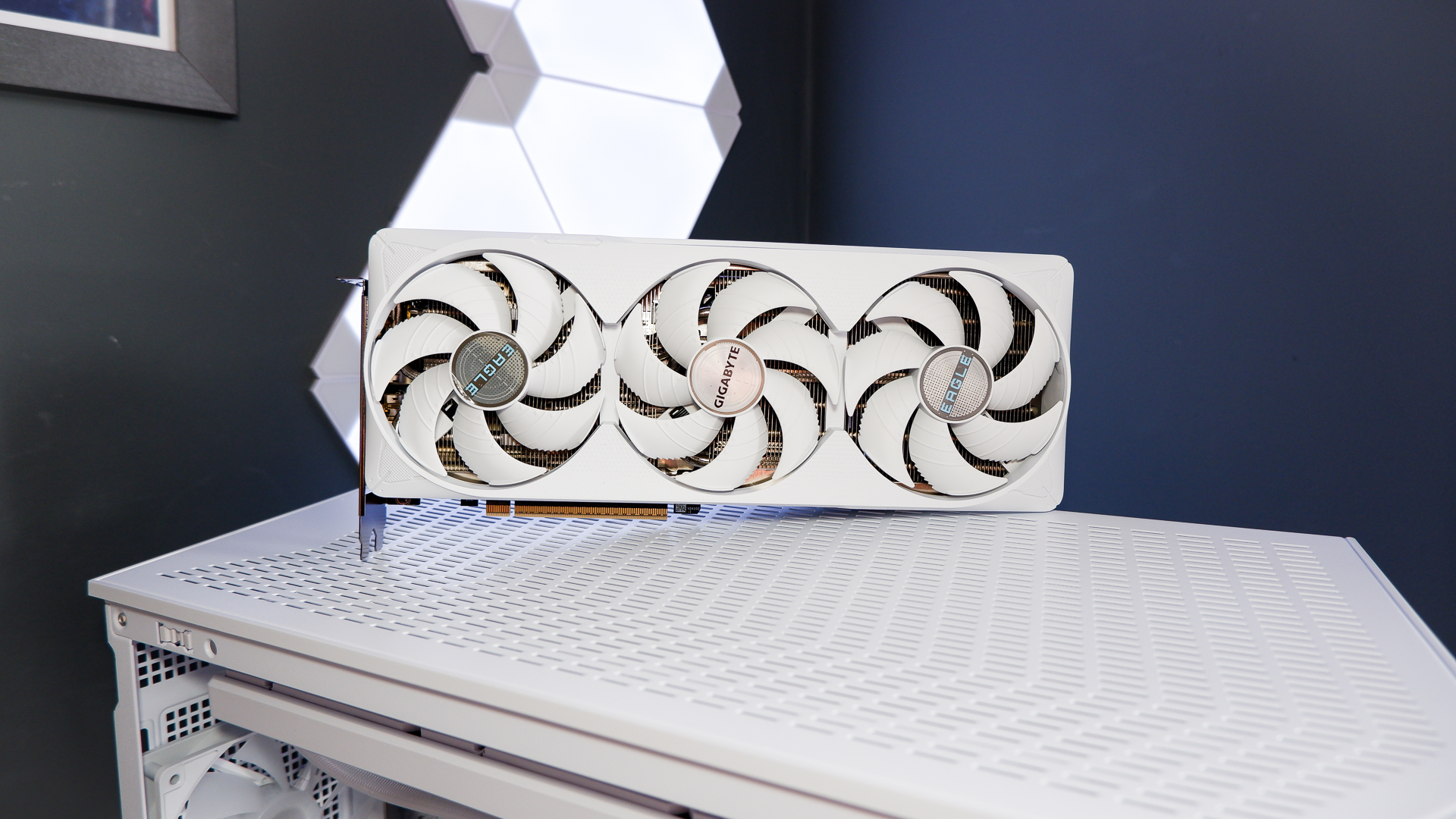

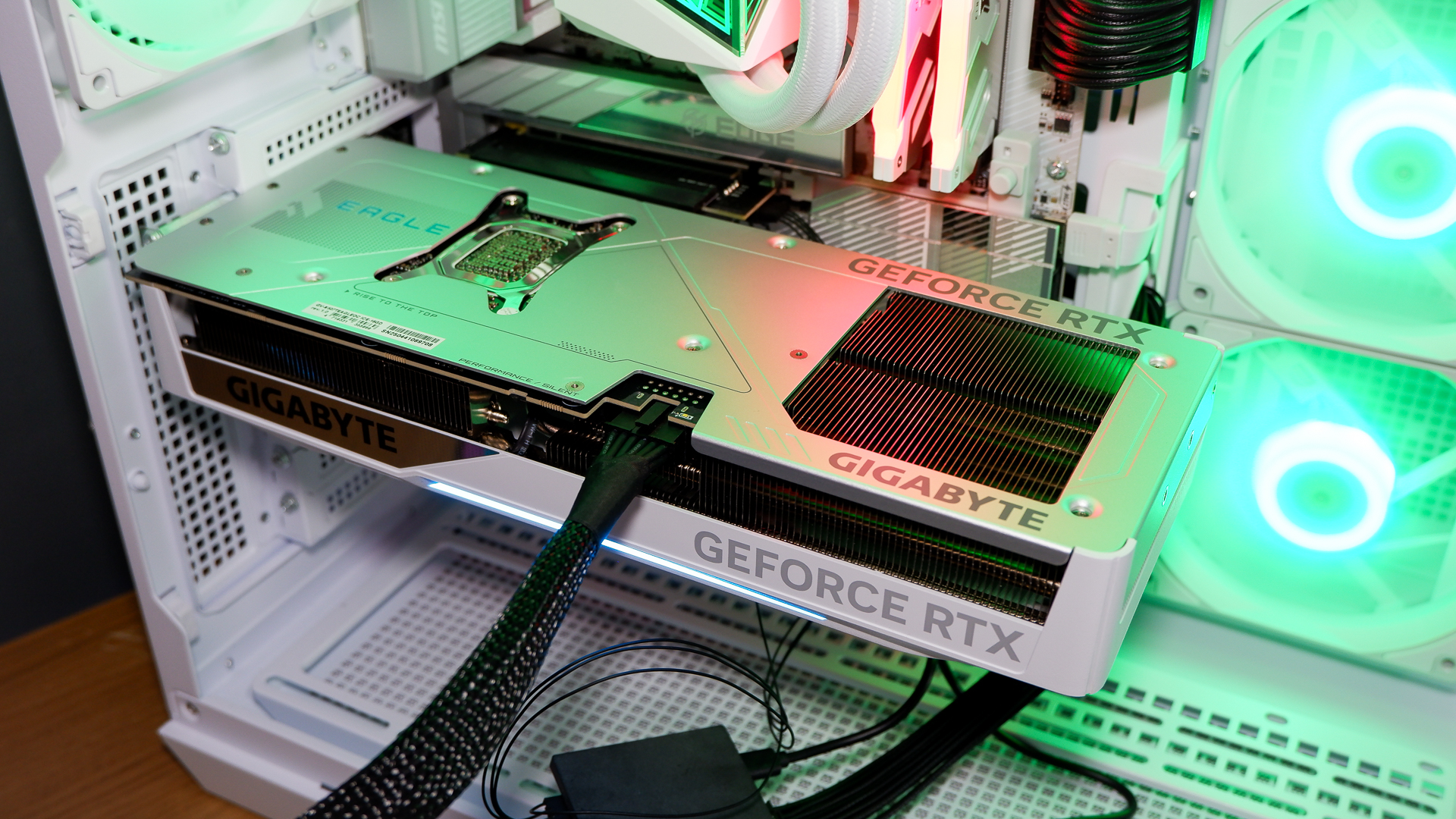

Gigabyte RTX 5060 Ti Gaming OC | 16/8 GB GDDR7 | 128-bit | 4608 CUDA cores | 2.57 GHz boost clock | 180W | $530 at Amazon | £450 at Amazon UK (MSI card)

I admit that I had low hopes for the RTX 5060 Ti. After many hours spent ranting about the stupidly narrow memory bus on the RTX 4060 Ti and how close those two GPUs were to the RTX 3060 Ti (don't even get me started), I have come to accept that Nvidia is doing the same thing yet again. That's what they want, right?

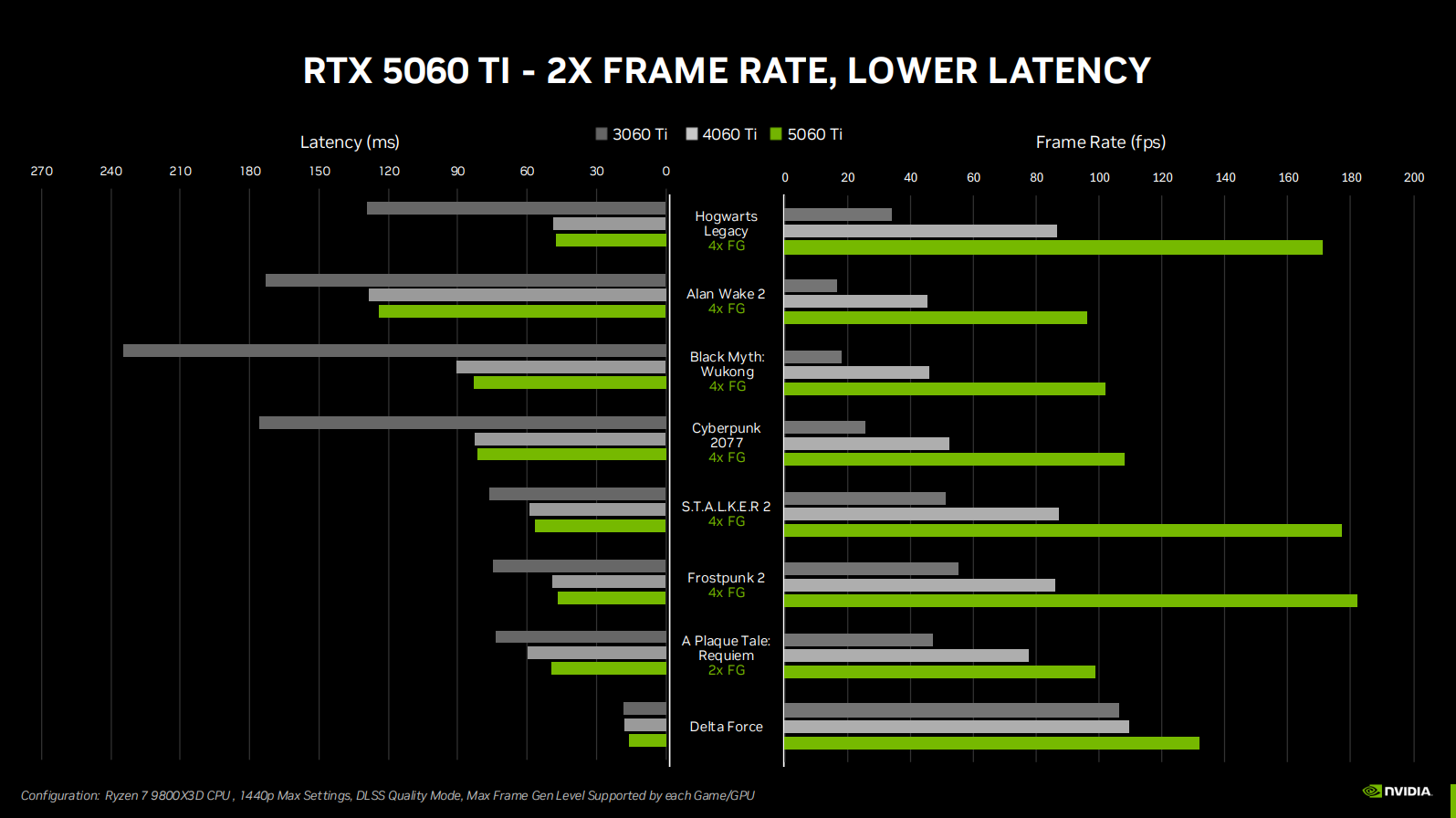

Imagine my disappointment then, when all my whining was for nothing, and the RTX 5060 Ti actually turned out to be a decent GPU? Preposterous.

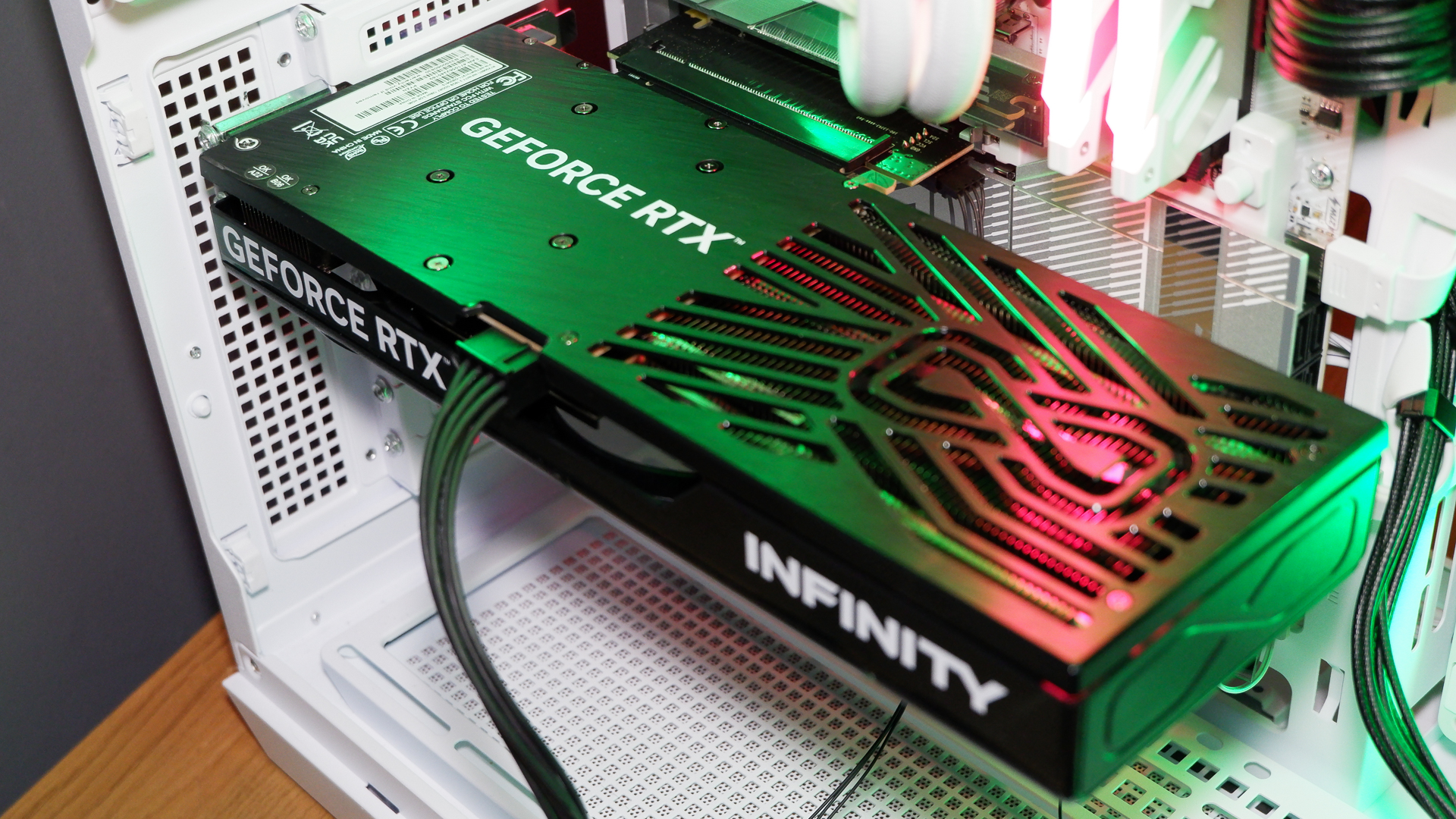

We took the Palit Infinity 3 RTX 5060 Ti 16 GB and the MSI Gaming Trio OC RTX 5060 Ti 16 GB out for a spin, and found that the former was 20% faster than the RTX 4060 Ti. This is a 1080p card, but reach into Nvidia's bag of goodies (aka DLSS and Multi Frame Generation) and you can pull off 1440p. If you force it to run at 4K, you'll find that the extra bandwidth gained from using speedier GDDR7 RAM actually puts it 40% ahead of the last-gen GPU.

Performance-wise, I'm pleased; price-wise, this GPU is stuck in the same hellscape we're all living in right now. The cheapest one I've found is this Gigabyte Gaming OC 16 GB model, priced at $530 (or an MSI Inspire 2X model for £450 in the UK).

The 8 GB version of the RTX 5060 Ti finds itself adrift in the current Nvidia lineup. You can buy one for $420 (£360), but the question is, should you? At 1080p, absolutely go for it. Anything beyond that and you're better off with the 16 GB version.

Nvidia RTX 5070

Gigabyte WindForce RTX 5070 | 12 GB GDDR7 | 192-bit | 6144 CUDA cores | 2.51 GHz boost clock | 250W | $604 at Newegg | £519 at Amazon UK (Palit card)

While the RTX 5060 Ti was a pleasant surprise, the RTX 5070 sort of disappointed me. But it's only just a bit pricier than the RTX 5060 Ti right now, and that gives it some merit.

This GPU is considerably slower than the RX 9070 XT, so if both were sold at MSRP, I'd be sending you a whole bunch of links to buy the AMD flagship instead. It's only around 13% faster than the RTX 4070 Super, but the access to MFG elevates it to a whole new level, unless you're not a fan of them 'fake frames' that is.

Nvidia's CEO Jensen Huang promised us RTX 4090-level performance with this one. Well, we're only about 80% behind that figure at 4K, so he was almost right. Kind of.

But if you compare it to cards in a similar pricing bracket, the RTX 5070 is not too bad. It's just not outstanding, but at $604 (£519), I'll take it.

Given the lacklustre gen-on-gen improvement, you might as well get the RTX 4070 Super, but that old GPU is actually pricier right now. The RTX 5070 quietly slides onto this list with no applause and no fanfare, but as a means to an end during a rough market.

My advice? It's got to be patience, unfortunately

If you're itching to get yourself a new GPU, I feel your pain. I was stuck in that same limbo back in 2022, with an aging PC that cried quietly when I tried to play Elden Ring. There were just no GPUs to be had, and my poor GTX 1060 had to keep on keepin' on until I finally sent it where GPUs go to retire in 2024.

I waited it out. So should you.

The problem, right now, is twofold. One: GPUs are almost never sold at MSRP, knocking down the value for money aspect. Two: Even if they're ever sold at a reasonable price, they're also quick to sell out. Okay, I lied, because here's… Three: There are no last-gen cards available at MSRP either.

If you've got an unlimited budget and a GPU that's currently on fire as we speak, go ahead and buy one of the five graphics cards I listed above. Otherwise, my recommendation is patience.

The prices are wild, but they're getting better. It's already possible to score a graphics card near MSRP in the UK, and hopefully, the US market will catch up eventually. Until then, keep pushing your old GPU to do its best. I hear words of encouragement don't work, but it never hurts to try.

That's coming from Benchlife, which says that, despite recent rumours to the contrary, "we have reliable sources telling us that there is currently no plan to stop supply or cancel it. As for the news from the market, it is just a rumour. The main reason is as mentioned earlier, it is entirely due to the reaction to the GeForce RTX 5060 Ti" (machine translated).

It's true, as Benchlife says, that Nvidia only sent out 16 GB versions of the card for review. Plus, there's the fact that some early RX 9060 XT listings that showed up were all 16 GB versions. However, it's unclear why these two facts would make people assume that AMD would cancel the 8 GB version entirely.

Whatever the reason, according to Benchlife's sources, we can still expect an 8 GB version in addition to a 16 GB version. We're hoping these will get announced at AMD's Computex press conference towards the end of this month. AMD specifically mentioned gaming in its announcement of this press conference, so fingers crossed.

However much people like to decry 8 GB of VRAM as not enough for gaming in 2025, with prices being what they are, a budget 8 GB card from AMD might be just what's needed to spice up the low end of the market. Plus, we already know that even some big AAA games are fine with just 8 GB of VRAM; it's all game-dependent.

That being said, everything will, as always, depend on stocks. Without ample stocks, AIBs can charge what they like and people will still presumably lap it up.

As for what to expect in terms of performance, we have some indication that it'll be about half as powerful as the RX 9070 XT. Although this isn't official, recent leaks have it that the RX 9060 XT will have the same number of shader cores and whatnot as the RX 7600 XT, but with a higher boost clock.

Plus, of course, you'll be getting all that sweet RDNA 4 goodness, including FSR 4 upscaling and frame gen. Which, I might add, is a pretty impressive generation of that tech that all but closes the gap on Nvidia. For more speculation about the actual performance of the RX 9060 XT, though, you can check out Jeremy's story linked above, as he gives a good picture of what might be in store for us.

With an RX 9060/9060 XT launch seemingly just around the corner, the RTX 5060 being "available in May", and even RTX 5060 laptop GPU benchmarks starting to surface, it's looking like the lower end of the GPU market might finally—finally—be kicking into gear. I'll take more options over less any day of the week, 8 GB or otherwise.

As reported by VideoCardz (and attributed to a post on Weibo), a Japanese shop has taken to informing potential customers it will not sell RTX 5090 and RTX 5080 GPUs to those looking to take them outside of Japan.

A memo was spotted underneath the sign for a Zotac RTX 5090 in an electronics store in Osaka, which, when roughly translated with Google, says "RTX 5090/RTX 5080 is only sold to customers for use in Japan. If the purchased product is to be taken out of Japan, it will not be sold."

The Zotac RTX 5090 in said electronics store sells for ¥452,800, which equates to roughly $3,170. This price is inclusive of sales tax, though those with foreign passports can apply for tax-free shopping at many retailers when paying over ¥5,000 ($30).

Some tourists may opt to buy their GPUs this way, both for the lack of sales tax and to take advantage of dips in the price of Yen. Presumably, this store has seen quite a few tourists picking up cards to bring home or to sell, as it's cheaper (or more readily available) to pick up than at home.
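To put a rough number on that tax saving, here's a sketch. It assumes the ¥452,800 shelf price includes Japan's standard 10% consumption tax and reuses the article's ¥452,800 ≈ $3,170 conversion as the exchange rate; actual tax-free procedures, exclusions and rates can vary by retailer and over time.

```python
# Rough saving from buying the Zotac RTX 5090 tax-free in Japan.
# Assumptions: the shelf price includes the standard 10% consumption tax,
# and the article's own yen-to-dollar conversion is used as the rate.
TAX_RATE = 0.10
SHELF_PRICE_JPY = 452_800
SHELF_PRICE_USD = 3_170


def tax_free_price(inclusive_price: float, rate: float = TAX_RATE) -> float:
    """Strip consumption tax from a tax-inclusive price."""
    return inclusive_price / (1 + rate)


yen_per_usd = SHELF_PRICE_JPY / SHELF_PRICE_USD
net_jpy = tax_free_price(SHELF_PRICE_JPY)
saving_jpy = SHELF_PRICE_JPY - net_jpy

print(f"Tax-free price: ¥{net_jpy:,.0f}")
print(f"Saving: ¥{saving_jpy:,.0f} (about ${saving_jpy / yen_per_usd:,.0f})")
```

On those assumptions, a tax-free buyer saves around ¥41,000, or a little under $300, on this one card, before any currency swings are factored in.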

However, no information is given on how exactly this policy could be enforced. It doesn't specify tourists, so simply checking for a foreign passport wouldn't quite work, and the likelihood of a store asking for proof of residence before allowing someone to purchase an item is quite slim. That's before mentioning that a Japanese resident could feasibly purchase a card for a potential buyer and hand it over outside the store, you know, like a teenager chancing their arm at getting alcohol to impress their friends.

Back around the launch of the RTX 30-series cards, UK retailer Overclockers UK halted sales to the US due to high demand, and recent tariffs have also stopped RTX 50-series cards being shipped to the US, but you can still buy any card in the UK and bring it across should you want to. This new policy in Japan is quite different, as it's about stopping customers from buying in the physical shop specifically.

According to VideoCardz, some Japanese stores opted to deny customers looking to buy GPUs without sales tax, but tourists still bought the cards at full price. Some tourists reportedly found it was cheaper to fly to Japan and buy a card than buy it in their home country, even at an inflated price with included sales tax.

Still, the store is likely putting a metaphorical line in the sand here, even if it feels very hard to enforce in any real way.

Best gaming PC: The top pre-built machines.

Best gaming laptop: Great devices for mobile gaming.

Yup, Nvidia will sell you such a card, and it's not based on an irrelevant GPU built specifically for AI processing. In fact, the Nvidia RTX Pro 6000 Blackwell Workstation Edition uses the same GB202 chip as the mighty GeForce RTX 5090. Except the RTX Pro 6000 gets a very nearly fully enabled GB202 GPU with a ridiculous 24,064 CUDA cores, up from the feeble 21,760 cores of the RTX 5090.

Add in that 96 GB of VRAM, three times the 32 GB you get with the 5090, and you have an absolute beast of a video board. It's the ultimate in future-proofing, surely?

Well, possibly, but there are a couple of pifflingly minor caveats. First, the RTX Pro 6000 has an MSRP of $8,565. That's not great value considering the RTX 5090 with the same GPU, albeit slightly cut down, has an MSRP of $1,999. Even if you factor in the $3,000 that 5090s tend to go for in the real world, you're paying awfully heavily for that extra 64 GB of VRAM.
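Here's that back-of-envelope maths spelled out. It's deliberately crude: it charges the entire price gap to the extra 64 GB of memory and ignores the Pro card's additional CUDA cores and workstation support. The ~$3,000 figure is the rough real-world 5090 price mentioned above, not an official number.

```python
# Crude cost of the RTX Pro 6000's extra VRAM over an RTX 5090.
# Assumption: the whole price gap is charged to the memory, ignoring the
# Pro card's extra CUDA cores and workstation drivers.
PRO_6000_PRICE = 8_565  # RTX Pro 6000 MSRP, USD
PRO_6000_VRAM_GB = 96
RTX_5090_VRAM_GB = 32


def premium_per_extra_gb(rtx_5090_price: float) -> float:
    """Price gap divided by the extra memory (96 GB - 32 GB = 64 GB)."""
    extra_gb = PRO_6000_VRAM_GB - RTX_5090_VRAM_GB
    return (PRO_6000_PRICE - rtx_5090_price) / extra_gb


for label, price in (("MSRP", 1_999), ("typical street", 3_000)):
    print(f"vs 5090 at {label} (${price:,}): ~${premium_per_extra_gb(price):.0f} per extra GB")
```

Against the 5090's MSRP, that works out to roughly $103 per extra gigabyte, or about $87 per gigabyte against the ~$3,000 street price.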

The other snag is that, despite the fact that the RTX Pro 6000 uses exactly the same gaming-optimised GB202 silicon as the RTX 5090, it doesn't have the same gaming-centric drivers. In reality, it could be a fair bit slower than an RTX 5090 in games.

That's despite a higher peak boost clock of 2,600 MHz to the 5090's 2,407 MHz. The RTX Pro 6000's base clock is actually around 400 MHz lower than the 5090 at 1,590 MHz. All with a 600 W TDP.

Moreover, if you could somehow wangle an RTX 5090 at MSRP, then do the same with its successors, presumably the RTX 6090 and RTX 7090, you could probably enjoy all three over a six-year period for near enough what you're paying for the RTX Pro 6000.

As for that rumoured RTX 5070 Super with 18 GB, we're talking about a mere forum post referring to such a card. So, it's the most wafer-thin of rumours. But it does make sense.

There's a new variant of GDDR7 memory with 3 GB per chip, which would allow for 18 GB on the RTX 5070's 192-bit memory bus. Indeed, it's those 3 GB chips which allow Nvidia to create a 24 GB RTX 5090 laptop GPU with a 256-bit memory bus, where you'd normally expect 16 GB.
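The capacity maths is simple enough to sketch. Assuming each GDDR7 chip hangs off its own 32-bit slice of the memory bus, the bus width fixes the chip count, and total capacity is just chips multiplied by per-chip density. Clamshell designs, which put two chips on each 32-bit channel, would double these figures and aren't modelled here.

```python
# VRAM capacity from memory-bus width and per-chip density.
# Assumption: one GDDR7 chip per 32-bit channel (no clamshell layout).
BITS_PER_CHIP = 32


def vram_gb(bus_width_bits: int, gb_per_chip: int) -> int:
    """Total VRAM in GB for a given bus width and chip density."""
    if bus_width_bits % BITS_PER_CHIP:
        raise ValueError("bus width must be a multiple of 32 bits")
    chips = bus_width_bits // BITS_PER_CHIP
    return chips * gb_per_chip


for bus in (192, 256):
    for density in (2, 3):
        print(f"{bus}-bit bus, {density} GB chips -> {vram_gb(bus, density)} GB")
```

That gives 12 GB or 18 GB on a 192-bit bus and 16 GB or 24 GB on a 256-bit bus, depending on whether 2 GB or 3 GB chips are fitted.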

Likewise, an 18 GB RTX 5070 Super would sit more easily in the GeForce range now that Nvidia has released the RTX 5060 Ti with an optional 16 GB variant. It's a little odd to find the $429 RTX 5060 Ti 16 GB is cheaper than the $549 RTX 5070 with just 12 GB.
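To show just how odd that looks, here's each card's MSRP divided by its memory capacity. It's a blunt metric that says nothing about the RTX 5070's faster GPU; it only puts a number on the VRAM mismatch using the launch prices quoted above.

```python
# Launch MSRP per GB of VRAM for the two cards mentioned above.
# This ignores GPU performance entirely; it only highlights the memory oddity.
cards = {
    "RTX 5060 Ti 16 GB": (429, 16),
    "RTX 5070 12 GB": (549, 12),
}


def dollars_per_gb(price: float, vram: int) -> float:
    """MSRP in USD divided by VRAM capacity in GB."""
    return price / vram


for name, (price, vram) in cards.items():
    print(f"{name}: ${dollars_per_gb(price, vram):.2f} per GB")
```

That's roughly $27 per gigabyte for the RTX 5060 Ti 16 GB versus about $46 for the 12 GB RTX 5070.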

Whether Nvidia actually releases an RTX 5070 Super with 18 GB, we'll have to wait and see. At the right price, it could be a decent long-term investment. But expecting an Nvidia GPU at the right price is typically an exercise in frustration.

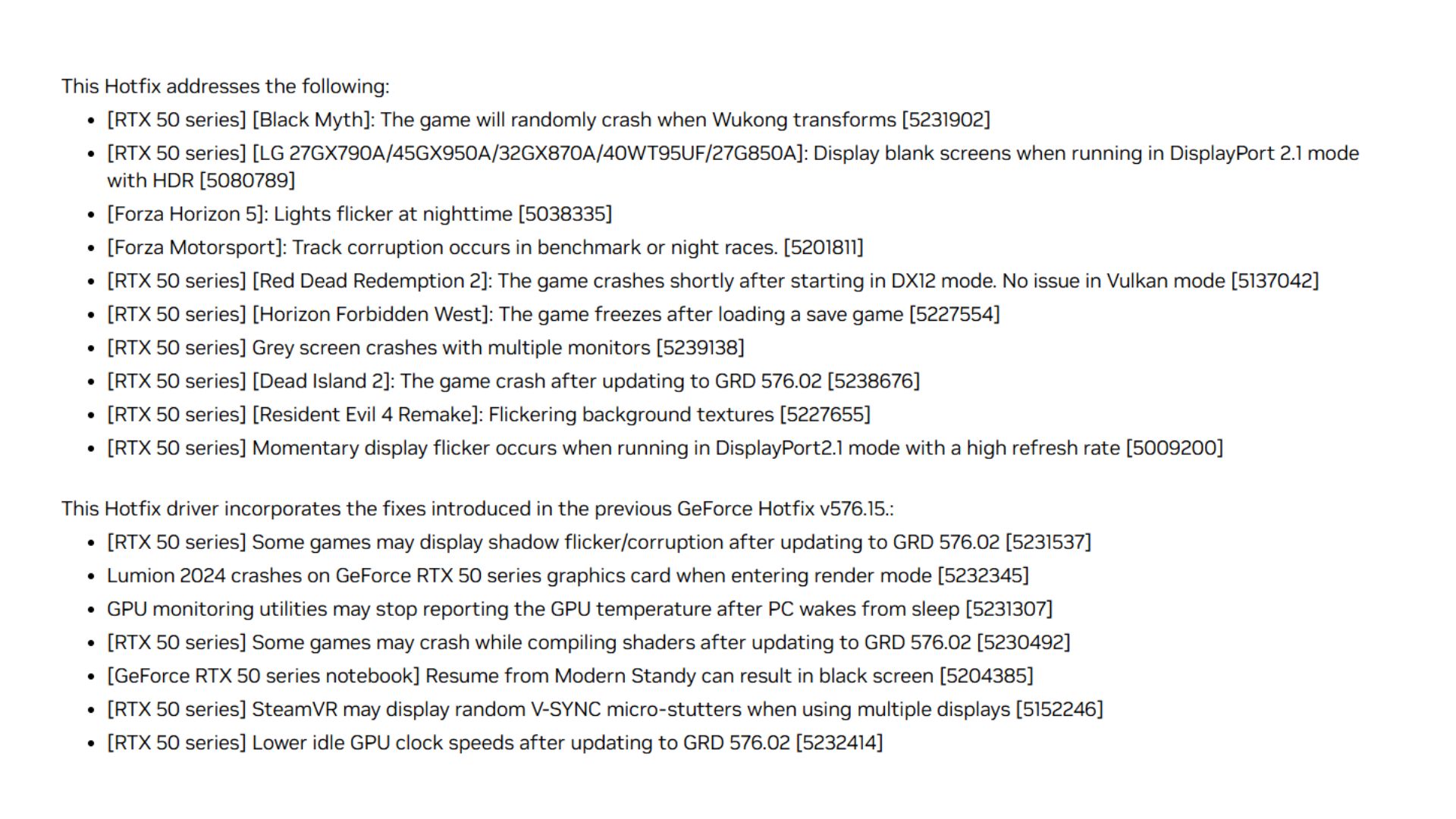

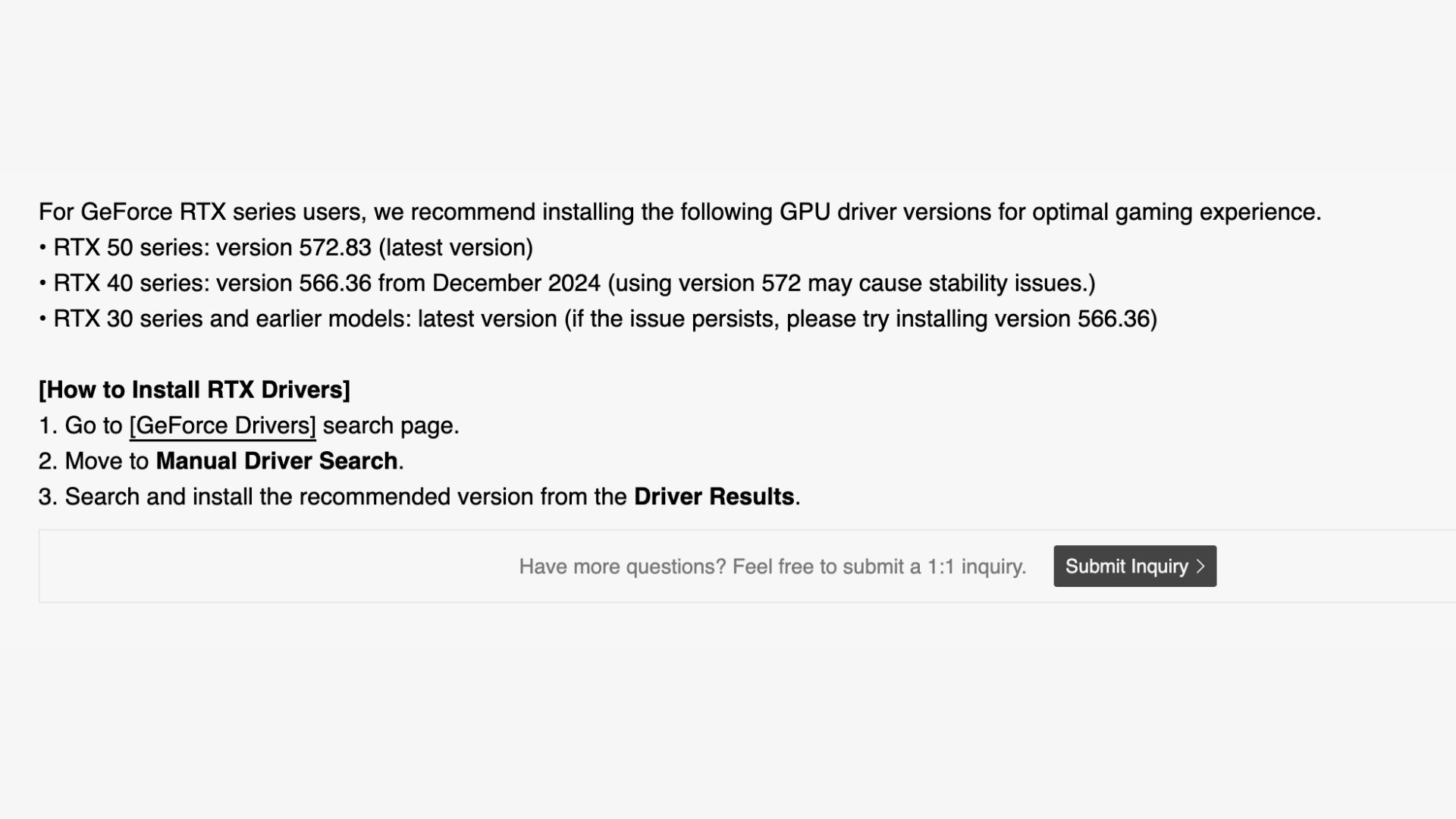

GeForce hotfix display driver version 576.26 has just launched, offering 10 additional fixes on top of version 576.15, which launched April 21. It primarily solves problems found with RTX 50-series cards in games such as Black Myth: Wukong, Red Dead Redemption 2, and Horizon Forbidden West, but it also addresses issues spotted with LG monitors and problems tracking GPU temperature after waking PCs from sleep mode.

Currently, in the Nvidia App, you can only download version 576.02 as part of the drivers tab, which was released alongside the launch of the RTX 5060 Ti on April 16. This is because hotfixes aren't published to the app and are something users have to specifically seek out if they want to enable them.

Nvidia says in its latest driver support page (with direct links to download the hotfix): "These fixes (and many more) will be incorporated into the next official driver release, at which time the Hotfix driver will be taken down. To be sure, these Hotfix drivers are beta, optional and provided as-is."

One commenter says that the latest driver has fixed problems they saw with connecting to their LG monitor via DisplayPort 2.1 with HDR enabled. Another comment claims that the Horizon Forbidden West freeze fix did not actually solve the crashing issues they have been having.

Nvidia feedback threads are the dedicated spot for, as you might be able to guess, giving feedback, so it's certainly nothing new to see negative responses in them. However, with hundreds of comments, the early feedback on the latest hotfix is looking pretty mixed at this time.

Going by the Nvidia Customer Care X account and the Nvidia feedback threads, hotfixes have historically been a relatively rare update for Nvidia. Despite this, both the 576 and 572 driver versions have had two separate sets of hotfixes in the last few months. Back in December, version 566 got a single hotfix, and so did Nvidia Broadcast in June last year. The last hotfix publicised on the Nvidia Customer Care page before that was on February 28, 2024.

Nvidia does clarify that hotfixes are "run through a much abbreviated QA process" and that "the sole reason they exist is to get fixes out to you more quickly." For a cleaner, safer upgrade process, Nvidia recommends waiting for a WHQL-certified driver.

This is where drivers go through the appropriate channels and are tested by third parties. When a driver makes it to the Nvidia App, it's a guarantee that it has been properly tested, though evidently not a guarantee that it will work on your specific system.

We should expect to see the full driver update in the near future.

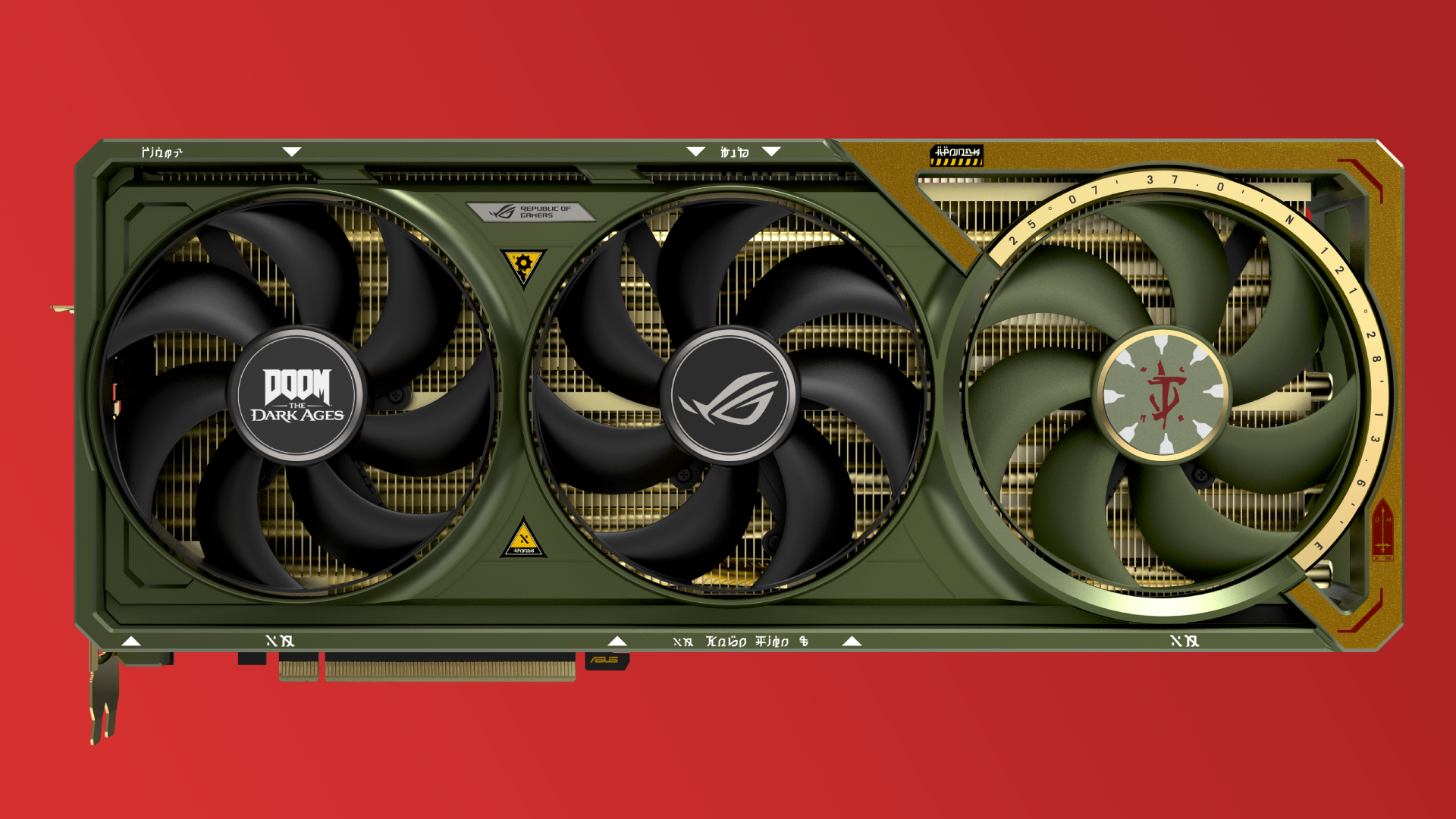

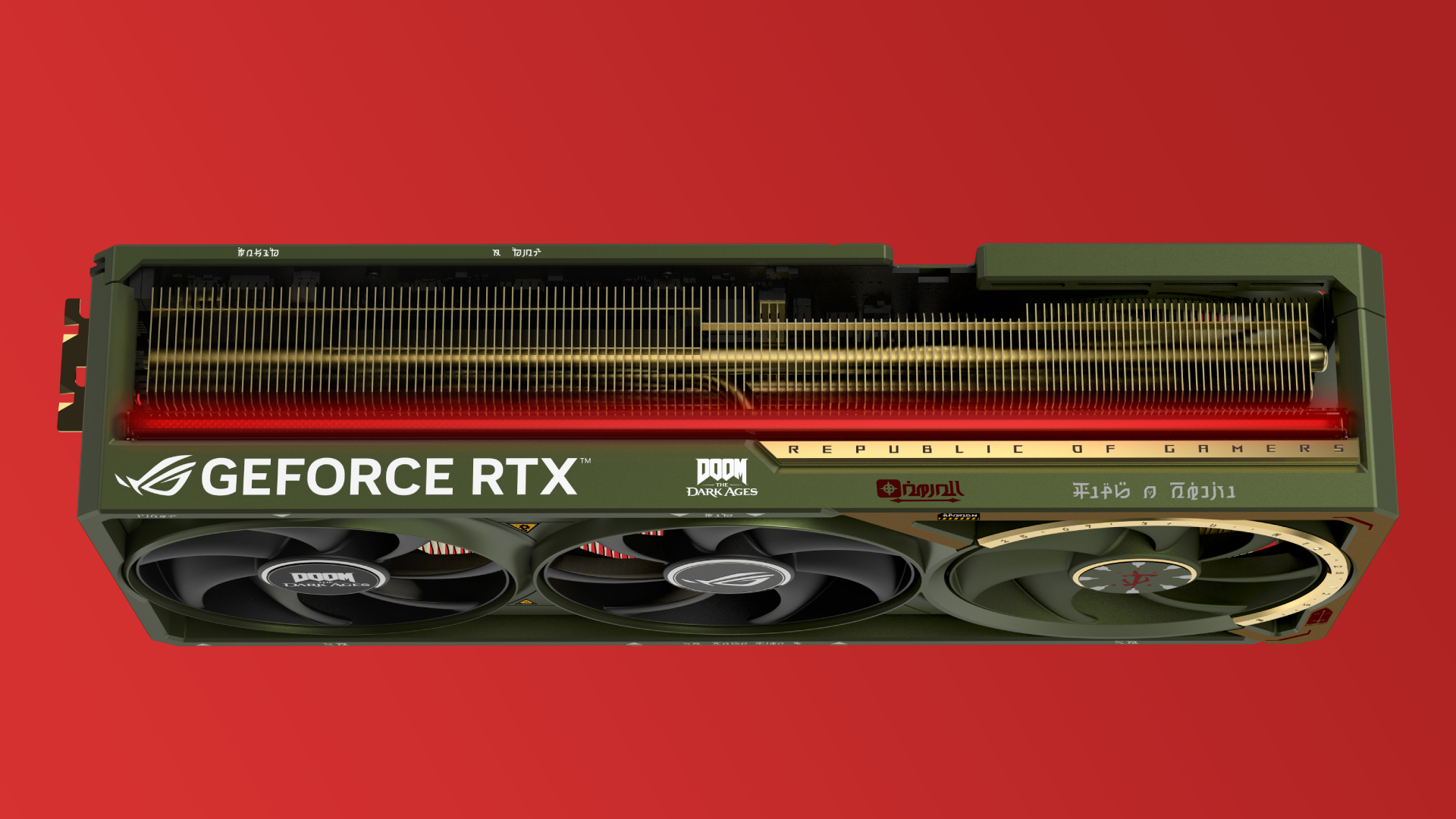

I'm not normally one for game-themed graphics cards, but in this case I'll make an exception. Asus has announced the ROG Astral GeForce RTX 5080 OC Doom Edition graphics card, just in time for the launch of Doom: The Dark Ages on May 13, and I reckon it's a bit of a looker.

Asus has partnered with Bethesda and id Software to celebrate not just the "legendary tenure of Doom" but also the 30-year anniversary of Asus designing and manufacturing graphics cards.

Oh, and apparently it represents Nvidia's longstanding reputation as the world's premier GPU designer, too. A trio of celebrations, then, although I'm less concerned about what it represents and more about how good an Asus ROG Astral card looks in some non-standard colours.

Asus says it'll be sold exclusively on the Bethesda Doom Gear store, and you'll also get a mouse mat, a t-shirt, a yellow keycard (!) and an exclusive Doom Slayer Legionary in-game skin. The listing's not up on the store page yet, but pre-orders are said to be starting shortly, although I'd imagine it won't be cheap.

Not that any RTX 5080 is at this point, but still. A Doom-themed RTX 50-series GPU and a cool yellow keycard? Yep, that'll be all the monies, I reckon. Still, at least you can actually buy one, unlike the Starfield-themed RX 7800 XT of yore, which was only available as a giveaway.

It's a ROG Astral edition card, which means you get the full benefit of all of Asus' cooling-related enhancements. This includes four Axial-tech fans, a patented vapour chamber design, a phase-change thermal pad, and what Asus says is a "vast fin array" that helps provide "effortless cooling in the heat of battle."

Not that the RTX 5080 is known for being a particularly hot-running card, even with a significant overclock. Our hardware overlord, Dave James, managed to push the chip in our Founders Edition sample 500+ MHz beyond stock with very little effort (and without any scary temperature leaps), but the Asus ROG Astral cards seem better equipped than most to deal with any "hotter than hell" overclocking moments.

Speaking of Asus overbuilding GPUs, it's also been revealed the ROG Astral lineup has some hidden hardware. Users have noticed that the included GPU Tweak III software has an Equipment Installation Check feature that uses an onboard gyroscopic measurement sensor to tell if the card is sagging in its slot, and provide a warning if it's off-kilter by a certain amount.

Yep, I couldn't quite believe it either. The ROG Astral lineup comes with an anti-sag device by default (as does the Asus TUF Gaming RTX 5070 Ti OC Edition I reviewed earlier this month, which comes with a weeny little GPU post of its own), but I'm mightily impressed that there's an actual sensor on board to tell if your GPU is on the droop.

The Slayer himself would be proud. After all, if you're going into battle with the demons of hell, it's probably best to make sure all your gear is in tip-top shape, isn't it?

Best CPU for gaming: Top chips from Intel and AMD.

Best gaming motherboard: The right boards.

Best graphics card: Your perfect pixel-pusher awaits.

Best SSD for gaming: Get into the game first.

Or it could have 64 lollipops, 16 ice creams, and 64 jelly sweets (via Videocardz). The formatting here could be clearer, but those numbers would line up with the top GPU in the RX 9000-series desktop stack, the RX 9070 XT. That's quite the performant graphics cruncher, although what wattage this mobile variant might run at is still up for debate.

Leaked spec list (April 25, 2025), read as CUs / GB of VRAM / MB of cache:
9080M: 64 / 16 / 64
9070M XT: 48 / 12 / 48
9070M: 32 / 8 / 32
9070S: 32 / 8 / 32
9060M: 28 / 8 / 32
9060S: 28 / 8 / 32

Underneath that is the rumoured RX 9070M XT, said to have 48 CUs, 12 GB of VRAM, and 48 MB of L3 cache, which would match the specs of the China-only (for now, at least) RX 9070 GRE, which has now turned up for pre-orders on the AMD website after leaks of its existence came out last week.

After that come the RX 9070M and RX 9060M, lower-spec 8 GB GPUs expected to use the Navi 44 GPU, with reported "S" variants. That's presumably indicative of lower-wattage models, similar to Nvidia's Max-Q mobile GPUs of old.

Should these rumoured mobile GPUs be on their way in the near future, it'd seem likely that AMD's recently-announced press conference at Computex 2025 on May 21 would be the time and place to unveil them, although that's all speculation for now. Still, the event promises the announcement of "key products and technology advancements across gaming, AI PC, and enterprise", so it seems a fair bet we'll get some more concrete details then.

Or not, if AMD's CES 2025 RX 9000-series announcement is any indicator. We were briefed on some of the specs of the new desktop cards beforehand, but the presentation itself contained very little information.

I'll be at Computex in person again this year, so it'll be interesting to see if the new mobile GPUs make a full appearance among all the inevitable AI feature announcements I've come to love so dearly.

It's also interesting to see the RX 9080 nomenclature rolled out for a mobile GPU, of all things. I'm not expecting AMD to announce an RX 9080 desktop card (after AMD graphics chief Jack Huynh made it very clear that this generation was aiming for the mid-range), but stranger things have happened.

As for whether we'll see the new mobile chips in many gaming laptops? That's unclear, too. The RX 9000-series desktop cards have been popular, so it's reasonable to think that AMD will be hoping some of that shine will rub off on the mobile variants—although whether laptop vendors adopt AMD GPUs en masse remains to be seen.

And what of the RX 9060 desktop cards? AMD confirmed that multiple "RX 9060 products" would be arriving in the second quarter of this year, which would suggest we might see an entry-level desktop GPU at Computex, too. Something to give the RTX 5060 and RTX 5060 Ti some serious competition at the budget end of the market? I'm hoping so, at the very least.

Best gaming PC: The top pre-built machines.

Best gaming laptop: Great devices for mobile gaming.

Videocardz reckons the new GRE variant will only be available in China at first, but we'll have to wait for the launch to be sure where exactly it will go on sale.

In the meantime, the RX 9070 GRE is based on the same AMD Navi 48 chip as the RX 9070 and RX 9070 XT, but with some elements disabled. It's rumoured to run 3,072 Stream Processor cores. That compares with 3,584 for the vanilla 9070 and 4,096 for the 9070 XT.

The GRE is also expected to sport a 192-bit memory bus and 12 GB of GDDR6, both of which are a step down from the 256-bit bus and 16 GB of the other 9070 variants.
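For a rough sanity check on those rumoured numbers, here's a quick Python sketch. It assumes 64 stream processors per RDNA compute unit, 56 CUs for the vanilla RX 9070, and one 32-bit, 2 GB GDDR6 module per memory channel; none of that comes from the GRE leak itself, and the helper names are purely illustrative. Under those assumptions, the GRE's 48 CUs and 192-bit bus work out to the 3,072 stream processors and 12 GB quoted above.

```python
# Rough spec arithmetic for the RX 9070 family (a sketch, not official AMD data).
# Assumptions: 64 stream processors per RDNA compute unit, and each GDDR6
# module has a 32-bit interface and 16 Gb (2 GB) of capacity.
SP_PER_CU = 64
BITS_PER_MODULE = 32
GB_PER_MODULE = 2


def stream_processors(compute_units: int) -> int:
    """Total shader count for a given number of compute units."""
    return compute_units * SP_PER_CU


def vram_gb(bus_width_bits: int) -> int:
    """VRAM capacity when one 2 GB module sits on each 32-bit channel."""
    modules = bus_width_bits // BITS_PER_MODULE
    return modules * GB_PER_MODULE


cards = {
    # name: (compute units, memory bus width in bits)
    "RX 9070 GRE": (48, 192),
    "RX 9070": (56, 256),
    "RX 9070 XT": (64, 256),
}

for name, (cus, bus) in cards.items():
    print(f"{name}: {stream_processors(cus):,} SPs, {vram_gb(bus)} GB on a {bus}-bit bus")
```

In other words, the narrower bus and the smaller memory pool go hand in hand: drop two 32-bit channels and you drop two memory chips.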

Consequently, the 9070 GRE ought to be significantly cheaper than the 9070 and 9070 XT, which have MSRPs of $549 and $599, respectively. Of course, you'd do well to buy either card at MSRP currently. It's tricky enough to grab one at all.

So, exactly where the RX 9070 GRE will land in terms of real-world pricing is just about anyone's guess. But it could be priced to take on the new Nvidia RTX 5060 Ti, which is MSRP'ed at $379 for the 8 GB version and $429 for the 16 GB option.

You'd expect the 9070 GRE to have the edge over those cards for pure raster performance, but perhaps not for ray-tracing. Path tracing is arguably too big an ask for any card at this price point.

But Nvidia's typically strong feature set, including the ML upgrades that come with its latest DLSS 4 upscaler, not to mention multi-frame generation, means that the choice is rarely as simple as comparing basic raster performance.

Of course, the GRE may end up a China-only GPU, in which case the comparison will be moot in most markets. But we suspect it will make it to global markets at some point.

For now, there's no word on an official launch date. But with product images leaking, it probably won't be too far away.

What I will say is that these numbers come from simply booting into the game and slapping each and every slider, from view distance to hair quality, up to Ultra (including Hardware Lumen RT, of course). To get a vaguely comfortable frame rate, though, I've had to enable both DLSS and Frame Generation. Obviously because I'm an Nvidia apologist.

Still, I was surprised to only be knocking on the door of 60 fps once I'd escaped the stinky Picard-infested sewers and emerged into wider Cyrodiil. This is the most powerful graphics card of today, running only in a pseudo 4K mode because I'm upscaling and actually just running the game at a far lower res.

I honestly wasn't expecting that Oblivion Remastered would be a game that actually necessitated Frame Generation to get the sort of frame rates I've become accustomed to with the RTX 5090. But it is 2025 and it seems like every new game is coming with the stipulation that upscaling and now frame gen are almost requisite for the top settings.

Stick standard 2x Frame Generation on and I can relax, knowing that I'm getting triple digit frame rates. Nvidia's own FrameView and overlay monitoring software don't seem to read the generated frames, though, claiming that I was still languishing around the 60 - 70 fps mark. That had me initially thinking that FG was giving me practically nothing, and had me rather concerned for the thousands of dollars' worth of GPU silicon struggling away under the Elder Scrolls load.

Thankfully both RivaTuner and Oblivion Remastered's own fps monitors were showing the same higher frame rate, which seems more redolent of the actual performance you would expect from adding in some extra frame smoothing goodness. It is worth noting, though, that RivaTuner can be a bit funky when it comes to the 1% Low metrics.

I am able to push that overall frame rate up to around the 170 - 200 fps mark using the DLSS Override feature of the Nvidia App and enabling 4x Multi Frame Gen—and it so far looks okay—but absolutely having to use RTX Blackwell's one neat trick to get higher frame rates and consistently nail a triple digit frame rate is really something.

Without upscaling or Frame Generation, I'm back down to a native 4K experience of around the 50 fps mark, and even with DLSS enabled I'm often dropping down below 60 fps in the outdoors areas.

That obviously changes indoors, with a far more limited view distance and the game engine having to do far less graphically intensive work. With 4x MFG I'm suddenly hitting a heady 200+ fps in those dark corridors.

But still, it's interesting to me that when you go cavalier with the in-game graphics settings in Oblivion Remastered, even the RTX 5090 will struggle. Thankfully, it's still a PC game with the bones of OG Oblivion at its heart, so there are myriad ways to bump up the frame rate, whether that's purely by stepping back the overall settings a touch, or being more aggressive in the upscaling level you're aiming for. And if you've somehow managed to bag yourself an RTX 50-series GPU you get to use Frame Generation in all its forms, too.

You could even do what I did the first time I played the original Oblivion back in 2006 and just run it in a tiny window on your 14-inch CRT monitor, y'know, just to squeeze out a playable frame rate. My old GeForce 6600 LE really did not cope with that game…

Appearing alongside the usual suspects, such as Nvidia CEO Jensen Huang giving a keynote just before the main event on May 19, AMD refuses to be overshadowed. AMD announced its own Computex press conference for May 21, at 11 am UTC+8 as both an in-person and streamed event.

Details about what will be discussed are expectedly thin. What we know so far is that Computing and Graphics Group boss Jack Huynh, plus guests, will take to the stage to discuss "how AMD is expanding its leadership across gaming, workstations, and AI PCs." As vague as that is, it could be a pretty exciting stageside chat, as a little sleuthing from our Jacob suggests we'll also hear more about AMD's affordable RX 9060 cards.

Last we heard, the RX 9060 cards would arrive some time between April and June. With the slightly delayed launch of AMD's RX 9070 and RX 9070 XT cards, it makes sense that perhaps AMD's plans for the RX 9060 cards have also been pushed back. That means a more detailed announcement in May would still vaguely line up ahead of a potential, properly-summer release.

As a perpetually chilly Brit, that would mean I have to survive England's seemingly never-ending false-spring long enough to get into actual-spring, but let's set aside my temperature-based trials and tribulations. Besides unveiling a few more affordable graphics card options for the cost conscious, AMD could also turn heads with a deeper dive into its Ryzen Z2 APU pitched for future handheld gaming PCs.

At any rate, it's definitely not unexpected that AMD would mention gaming and AI in the same breath—for one, Nvidia made bank on AI in its last financial year, and for another, the company's DLSS 4 deployment has made an Nvidia turncoat of our Andy.

Perhaps AMD is looking to regain some ground in the not-too-distant future. How will they do that, exactly? Well, you'll have to watch a very particular space; be sure to tune into AMD's press conference livestream at 8 pm PT/11 pm ET on May 20.
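If you want to double-check the livestream time against the 11 am UTC+8 (Taipei) slot AMD announced, the conversion is easy to sketch. This uses fixed UTC offsets rather than a time-zone database, on the assumption that US daylight saving is in force in late May (UTC-7 for Pacific, UTC-4 for Eastern).

```python
from datetime import datetime, timedelta, timezone

# Fixed UTC offsets valid for late May 2025 (assumption: US daylight saving time).
TAIPEI = timezone(timedelta(hours=8))
PDT = timezone(timedelta(hours=-7))
EDT = timezone(timedelta(hours=-4))

# AMD's Computex press conference: May 21, 11 am local time in Taipei.
start = datetime(2025, 5, 21, 11, 0, tzinfo=TAIPEI)

pacific = start.astimezone(PDT)
eastern = start.astimezone(EDT)
print(pacific.strftime("%b %d, %H:%M PT"))
print(eastern.strftime("%b %d, %H:%M ET"))
```

Both results land on the evening of May 20 in the US, which is why the stream falls the day before the Taipei date.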

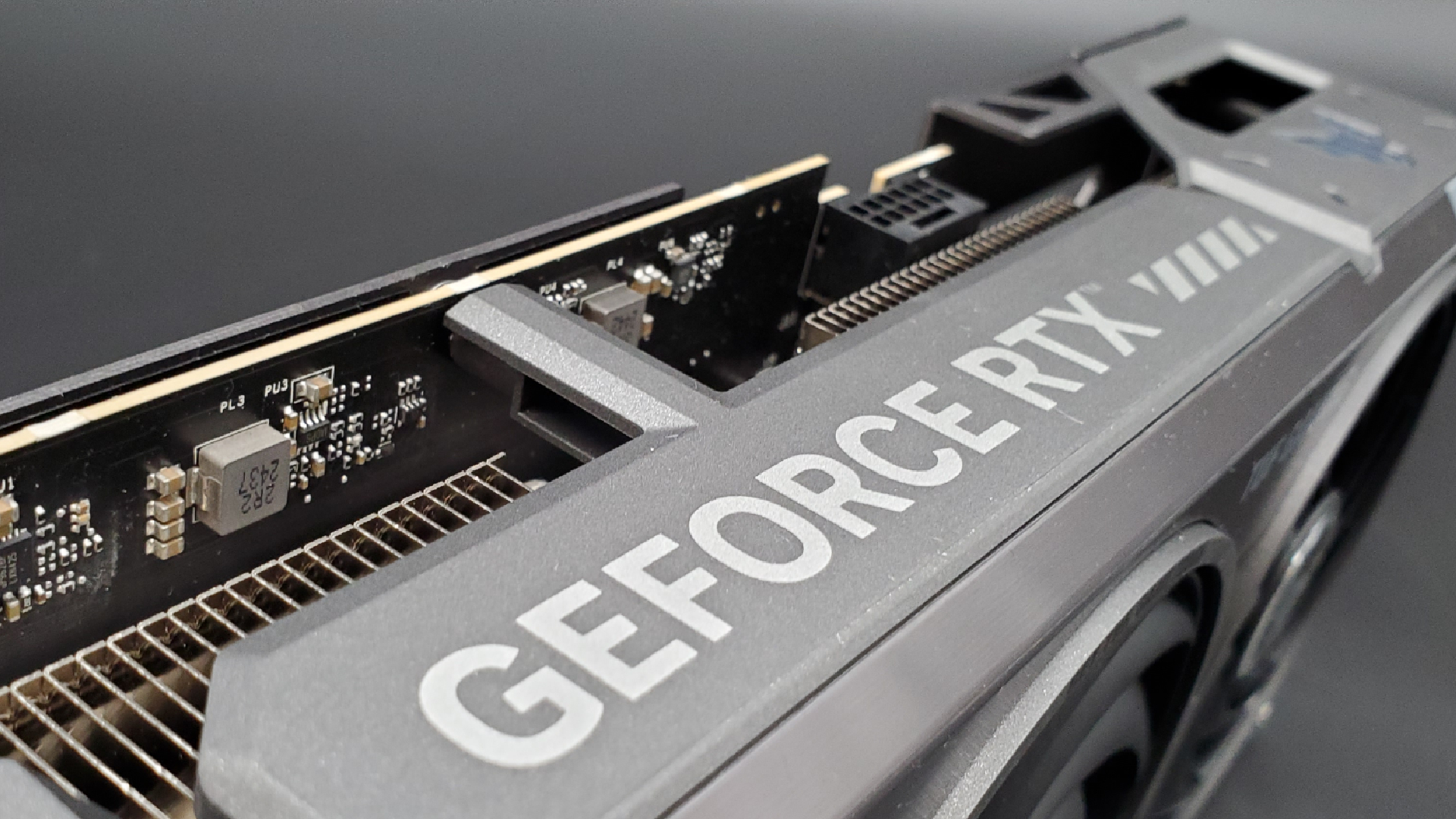

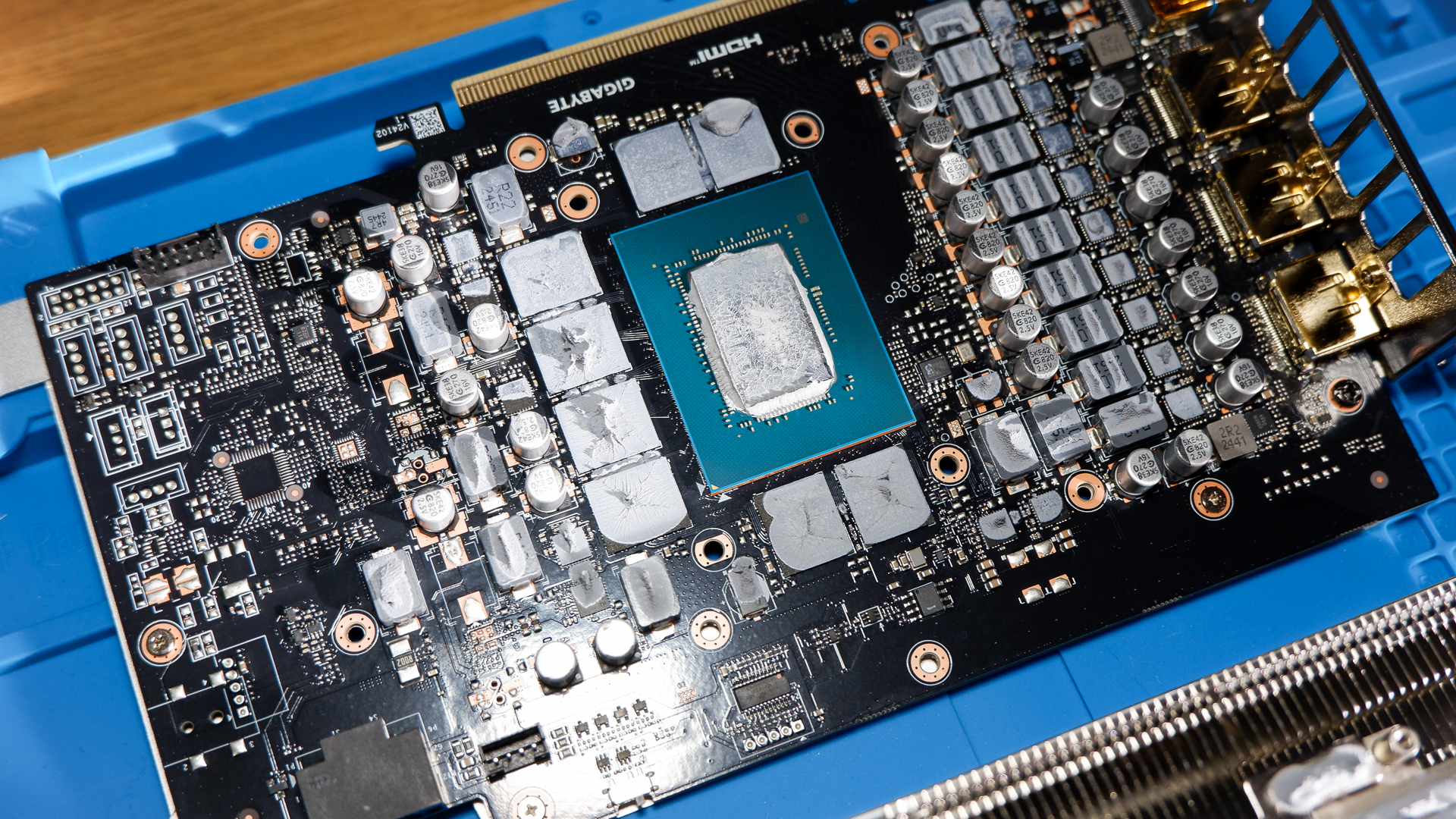

These hotspots seem to essentially and most directly be caused by power delivery components being placed too close together on the PCBs and connected via too few traces, although it seems that at least some of this density could in part be a result of an oversight in an Nvidia guide for board partners.

That's because, according to Igor, who references an RTX 40-series Thermal Design Guide due to an NDA looming over the one for RTX 50-series cards, the guide is "far too imprecise and incomplete", especially concerning the specific area where the hotspots are occurring.

Although the two cards in focus are the Palit GeForce RTX 5080 Gaming Pro OC and PNY GeForce RTX 5070 OC, Igor claims the hotspot can be seen on many more cards than these. It can apparently be seen in the same spot on "cards from major board partners such as Palit, PNY and MSI as well as variants from other manufacturers."

This can even occur on "cards that at first glance appear to be uncritical in terms of performance, such as an RTX 5060 Ti" because "even cards with a peak power of just 180 watts can be affected if the topology is concentrated on just a few supply phases and the problem persists due to the concentration."

So what's the issue exactly? Essentially—and to simplify something that Igor explains in much more detail—these 50-series PCBs seem to be designed in such a way that the densely packed VRMs concentrate the heat generated by the high current flows into a small area. It's not just about having too few VRMs, either, as it's especially pronounced where the vias (vertical connections through the PCB) pass through the copper power planes. They're closely routed together, resulting in a lack of vertical heat dissipation.

This leads to the hotspots that Igor identified. In the RTX 5080 he tested, the temperature in this spot reached over 80 °C and in the RTX 5070 he tested, it reached over 107 °C. While these temperatures might not cause immediate instability, the concern is that they might not be good for the card's long-term reliability. Igor says:

"Current-carrying paths are often routed up to the load limit of the thermal and electrical specification, with no additional leeway for ageing, manufacturing variation or load peaks. This results in structures that function reliably under ideal conditions but react sensitively to minor deviations in cooling, placement or load distribution."

The key phrase here is "under ideal conditions", and therein lies the possible criticism of Nvidia's Thermal Design Guide and one possible root cause of this hotspot problem.

The Thermal Design Guide is what Nvidia gives out to AIB partners, cooler developers, and so on, to set the standard(s) to compare different components' thermal planning against. According to Igor, using the RTX 4090's Thermal Design Guide as an example, there are some problems with Nvidia's guide or the AIB partners' use of it.

The overarching problem is that "many of the parameters are idealized assumptions that are relativized in practical implementation by various influencing factors." In other words, its standards don't seem to be applicable to real-world use, at least not without risking thermal issues. In fact, he presses this point home in regards to the hotspot issue he identified, saying: "It is remarkable that this particular area is not highlighted as a critical area in its own right in the official Thermal Design Guide."

Igor also goes on to demonstrate that it wouldn't take much to solve the issue. All one needs to do is create a "thermal relief" (a thermal pad) on the back of the graphics card where the hotspot is located, to help dissipate the heat.

This kind of solution is something that cooler manufacturers for these graphics cards could have presumably implemented had they known about the problem. Which is something that, also presumably, the Thermal Design Guide could and perhaps should have alerted them to.

As with most things in the industry, though, root causes are rarely simple to identify. Igor points out from the start that there are multiple kinds of actor involved in the production of a graphics card, and it might in fact be the poor communication and collaboration between different actors that can lead to such issues:

"Layout managers at board partners are often directly dependent on external PCB suppliers and have to adapt their designs to cooler solutions, which in turn are created under separate design and manufacturing premises. The lack of interoperation between these development lines then leads to design compromises which—as in the present case—can have unfavorable thermal effects without one of the parties involved being able to fully oversee this in isolation."

Too many cooks can spoil a broth, perhaps?

Whatever the case, I wouldn't worry too much about hotspots on your RTX 50-series GPUs just yet. These kinds of temperatures shouldn't cause short-term issues; the concern is more that GPU lifespans could be shortened over the course of a few years.

This isn't great, of course, but you shouldn't have to worry about your graphics card melting because of one of these hotspots any time soon. I'd probably be more concerned about those power cables…

X user Haze2K1 (via Videocardz) spotted a listing for an Intel BMG-31 GPU in a manifest on shipping data website NBD. Intel's latest Arc B580 and B570 GPUs are based on the BMG-G21 chip, while the G31 GPU has long been rumoured as the next step up in the Battlemage hierarchy.

Previous leaks have indicated the G31 GPU packs in 32 Xe cores. That compares with the 20 Xe cores of the existing Intel Arc B580. In really rough terms, then, G31 should be about 50% faster and roughly competitive with an Nvidia RTX 5070 or an AMD Radeon RX 9070.
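For a sense of just how rough that estimate is, here's the core-count arithmetic. 32 units against the B580's 20 is 60% more hardware, so an uplift of about 50% implicitly assumes performance won't scale perfectly with unit count. That's a common assumption for GPUs, not anything Intel has confirmed.

```python
# Rough Battlemage scaling arithmetic (illustrative only, based on leaked counts).
g31_units = 32   # rumoured BMG-G31 core count
b580_units = 20  # BMG-G21 as used in the Arc B580

unit_ratio = g31_units / b580_units
extra_pct = (unit_ratio - 1) * 100  # extra hardware, i.e. the ideal linear-scaling ceiling
print(f"{extra_pct:.0f}% more units")
```

Real-world gains would land below that ceiling once clocks, memory bandwidth, and drivers come into play.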

The catch here is that the mere existence of an Intel graphics card with a G31 GPU being shipped across the world does not prove that Intel plans to launch a retail product. Another rumour from around three weeks ago suggested that the G31 chip as a retail product was cancelled late last year.

Likewise, the shipping manifest lists the card as an "R&D" or research and development item. Of course, that's exactly what a pre-launch card would be listed as even if Intel was indeed planning on launching a retail G31-based graphics card, perhaps branded as Intel Arc B770.

If anything, the main reason to doubt that Intel is actually planning on pushing through a G31 graphics card into the retail market is that the launch window for a competitive offering is narrowing. The longer Intel leaves it following the release of the Nvidia and AMD competition, the less of an impact G31 is likely to make.

Arguably, G31 would have to launch sometime in 2025 to make any sense at all, given you would expect Nvidia and AMD to release their own follow ups in the $500 GPU space towards the end of 2026 or early in 2027.

All that said, we'd welcome a G31-based gaming graphics card at almost any time, provided it's priced right. Intel's B580 board has several very promising attributes, including strong ray-tracing performance, and Intel's XeSS upscaling technology is pretty decent, too.

Given how aggressively Intel has priced the B580 and B570, you'd also expect a G31 board to undercut the likes of the RTX 5070 pretty comprehensively, raising the prospect of a card with performance that's roughly competitive with an RTX 5070 for around the $400 mark. Anywho, we'll have to wait, with all our fingers and toes crossed, to see if Intel does ever wheel out a G31-based GPU and, if it does, just how fast it is.

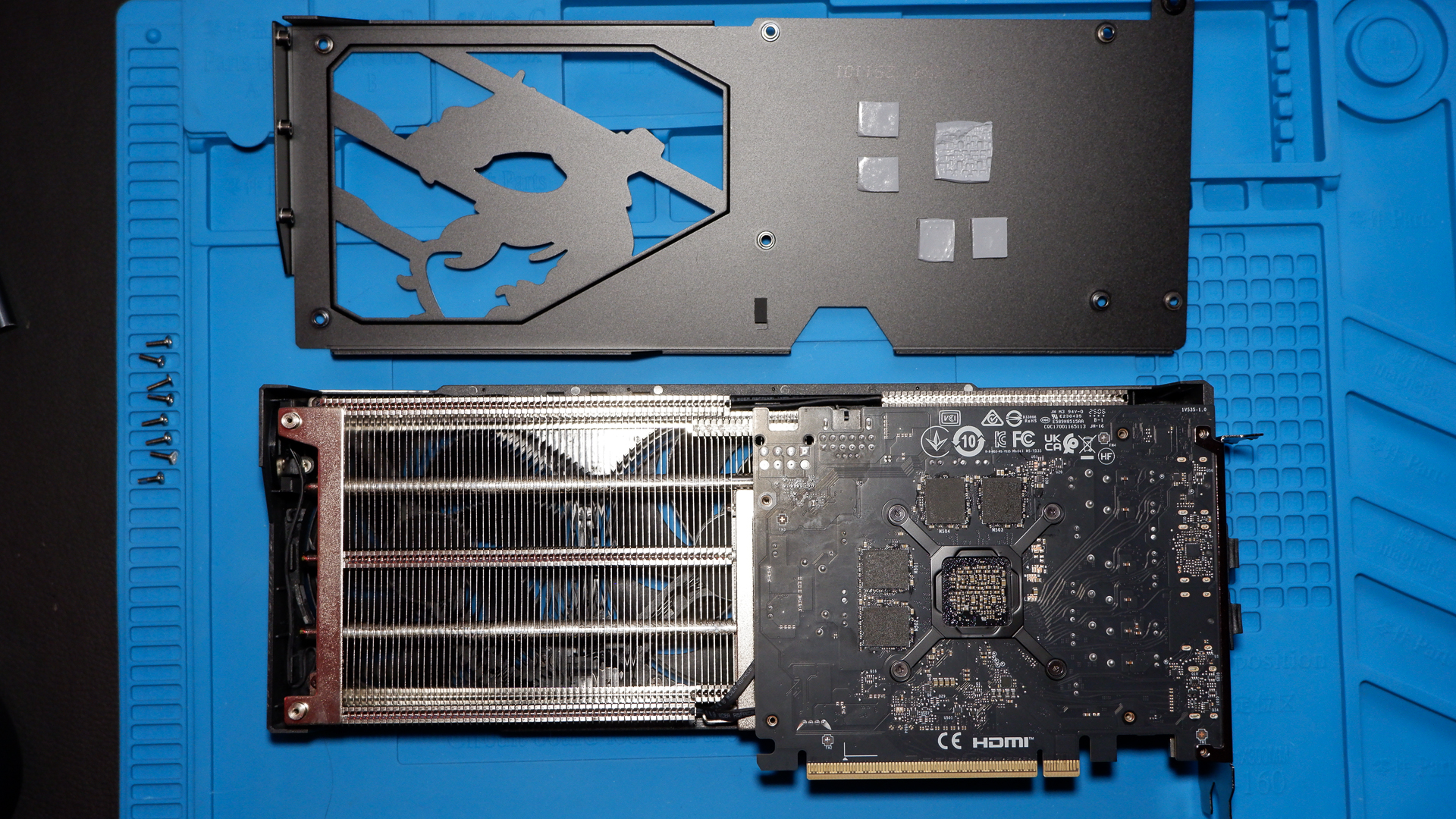

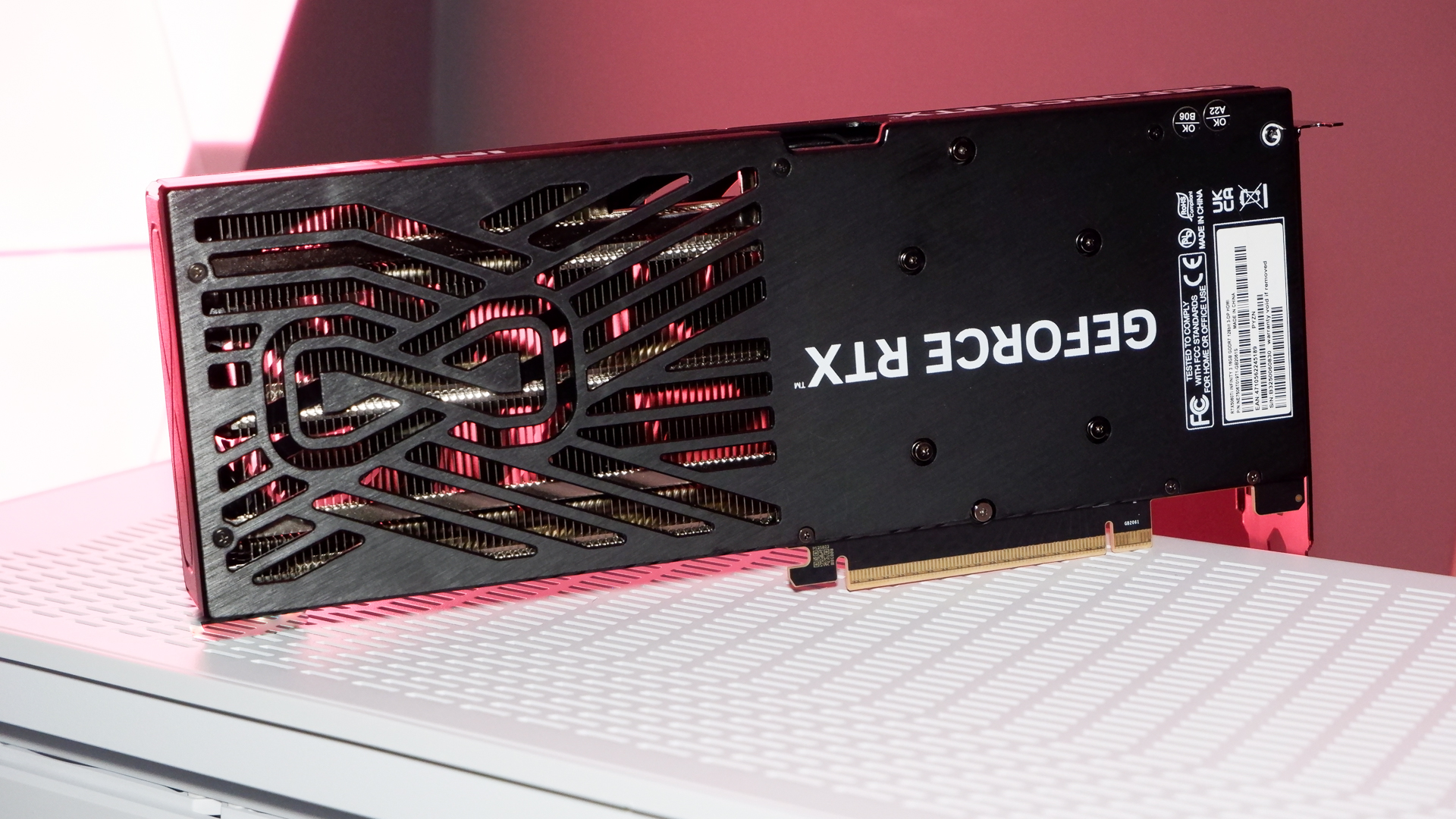

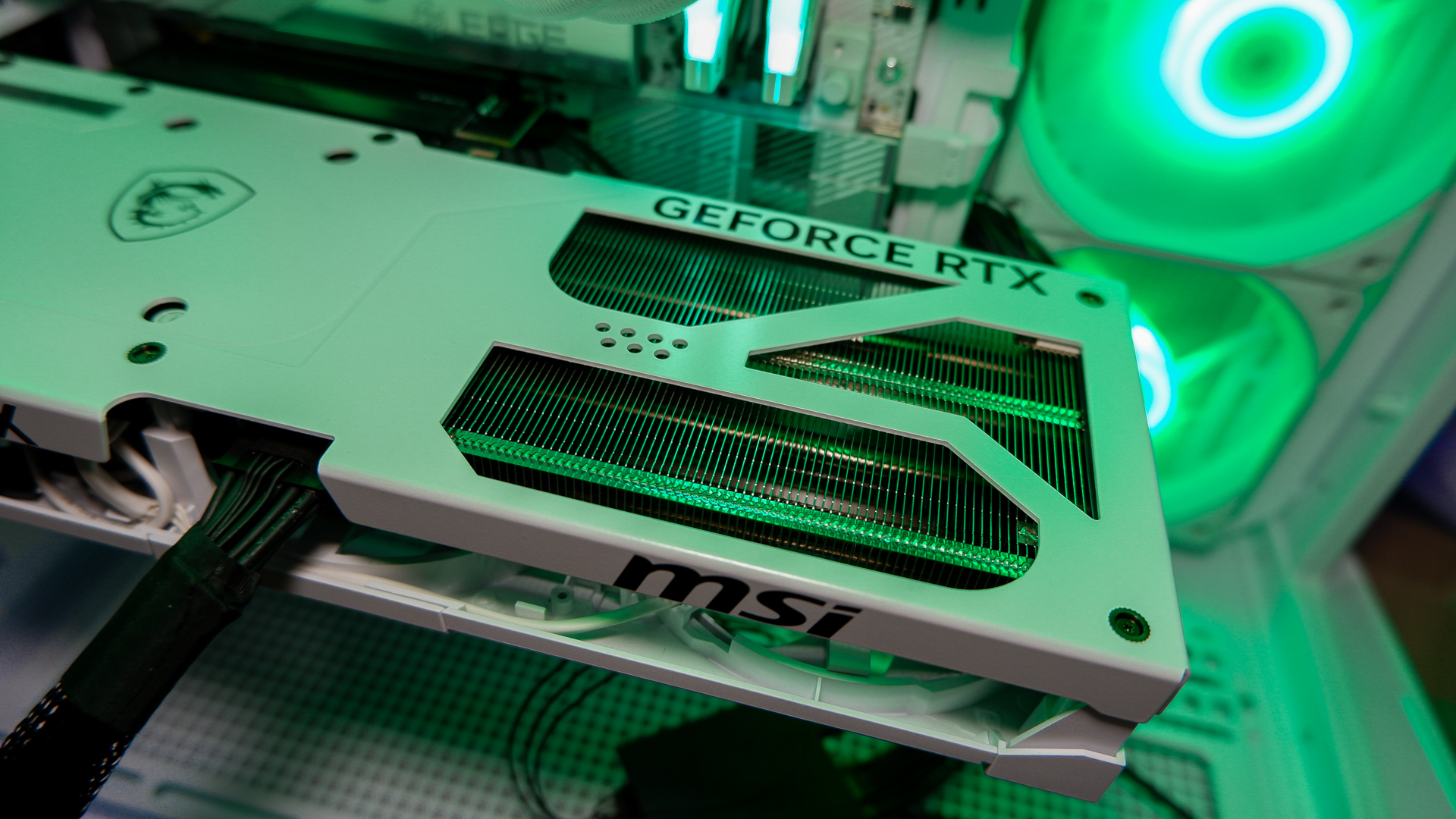

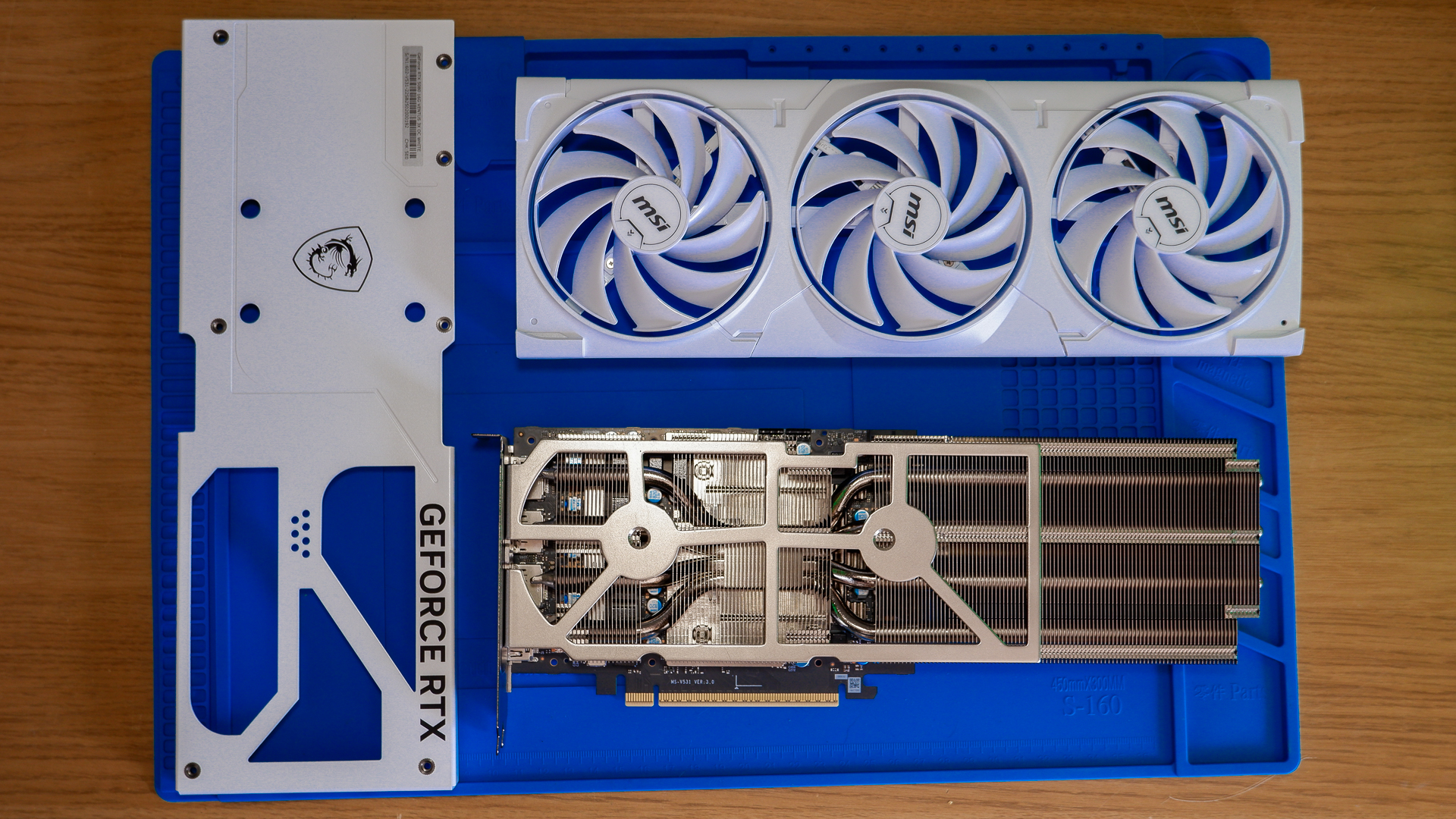

Some care and attention have been paid to the design of this triple-fan graphics card. That's clear to me as soon as I remove it from its crinkly packaging and spot the dragon motif cut away from its metal backplate. That's not the sort of flair you'll find on either MSRP card from PNY or Palit, with the latter actually lacking a metal backplate altogether.

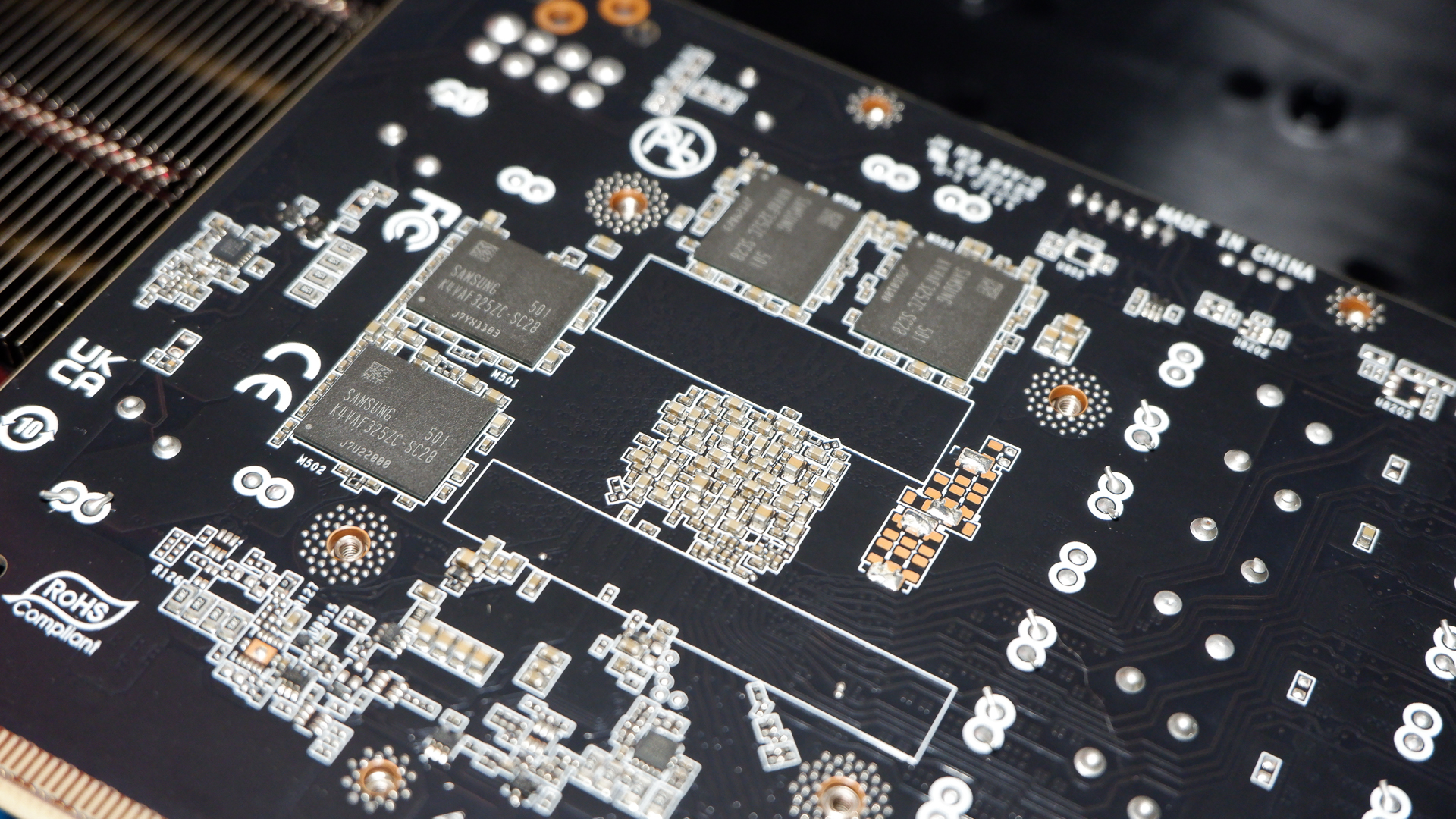

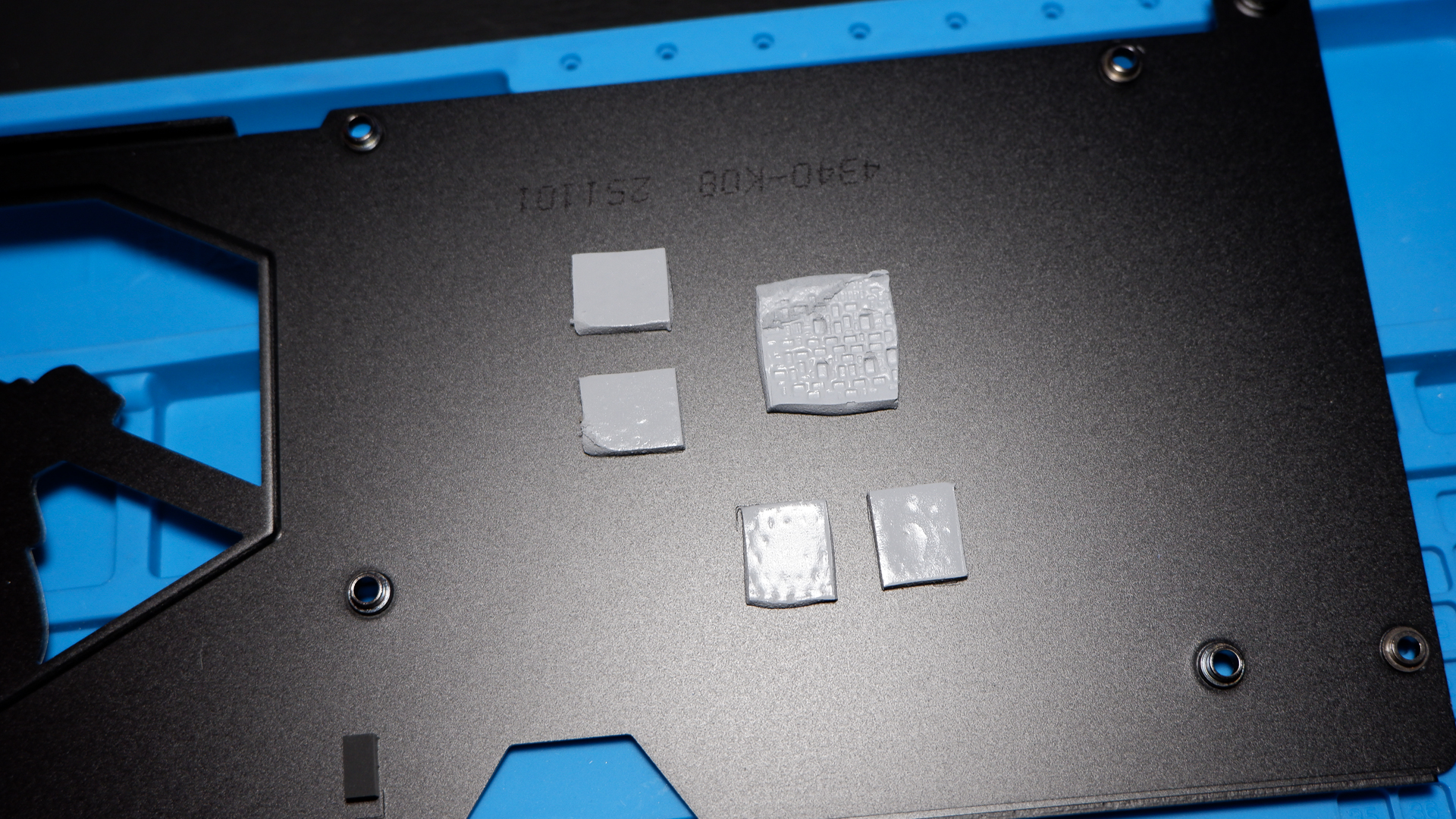

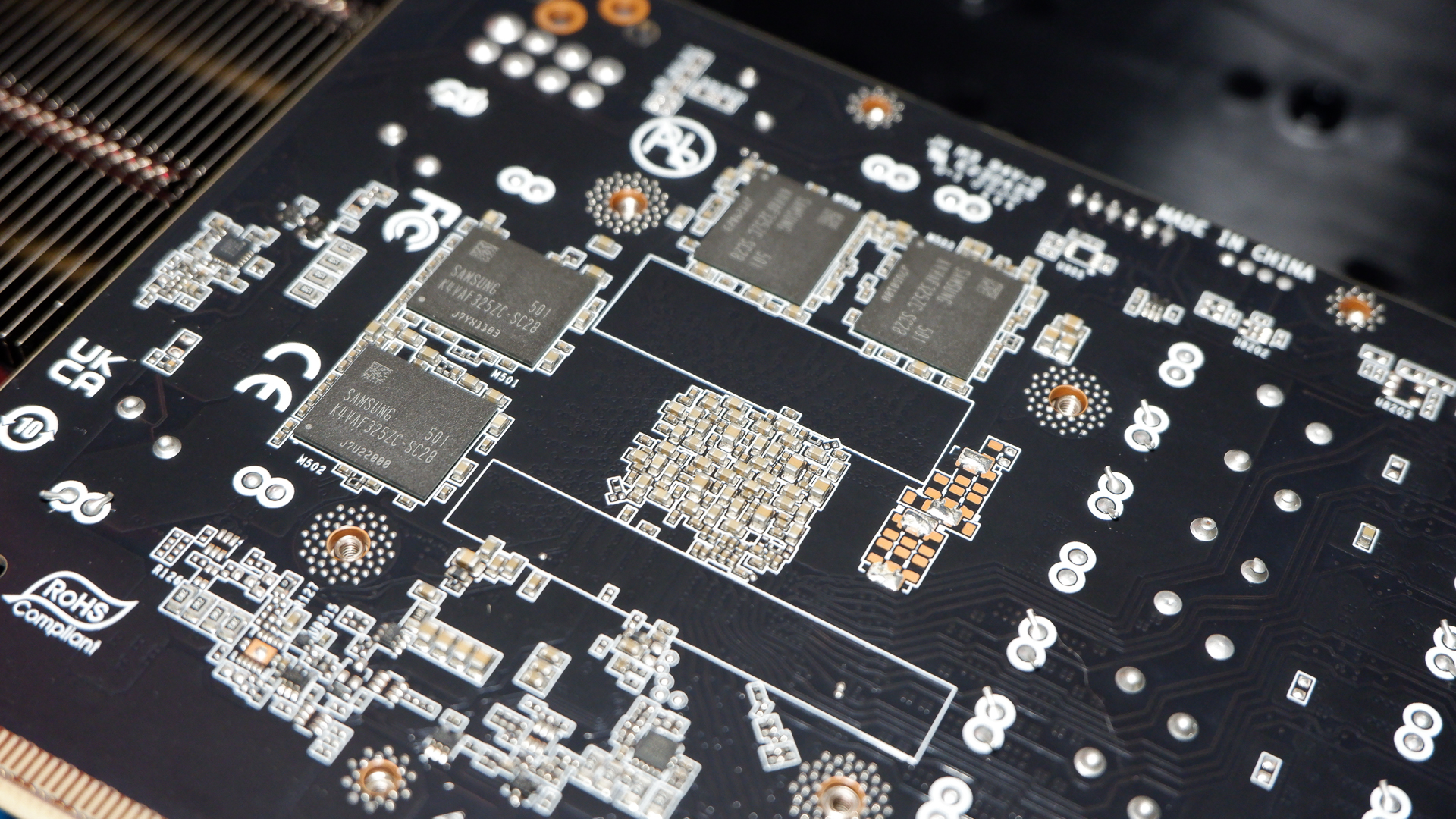

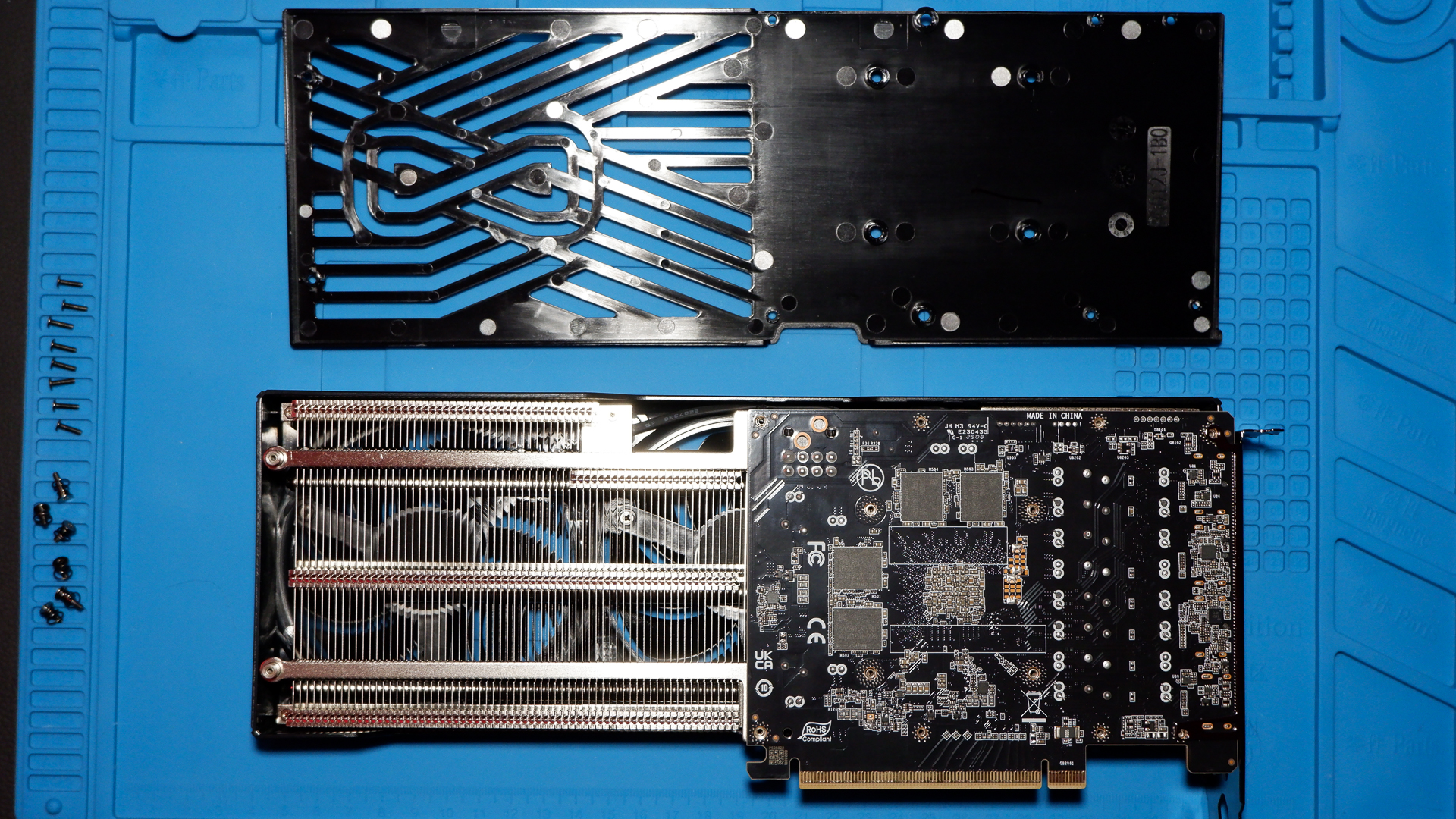

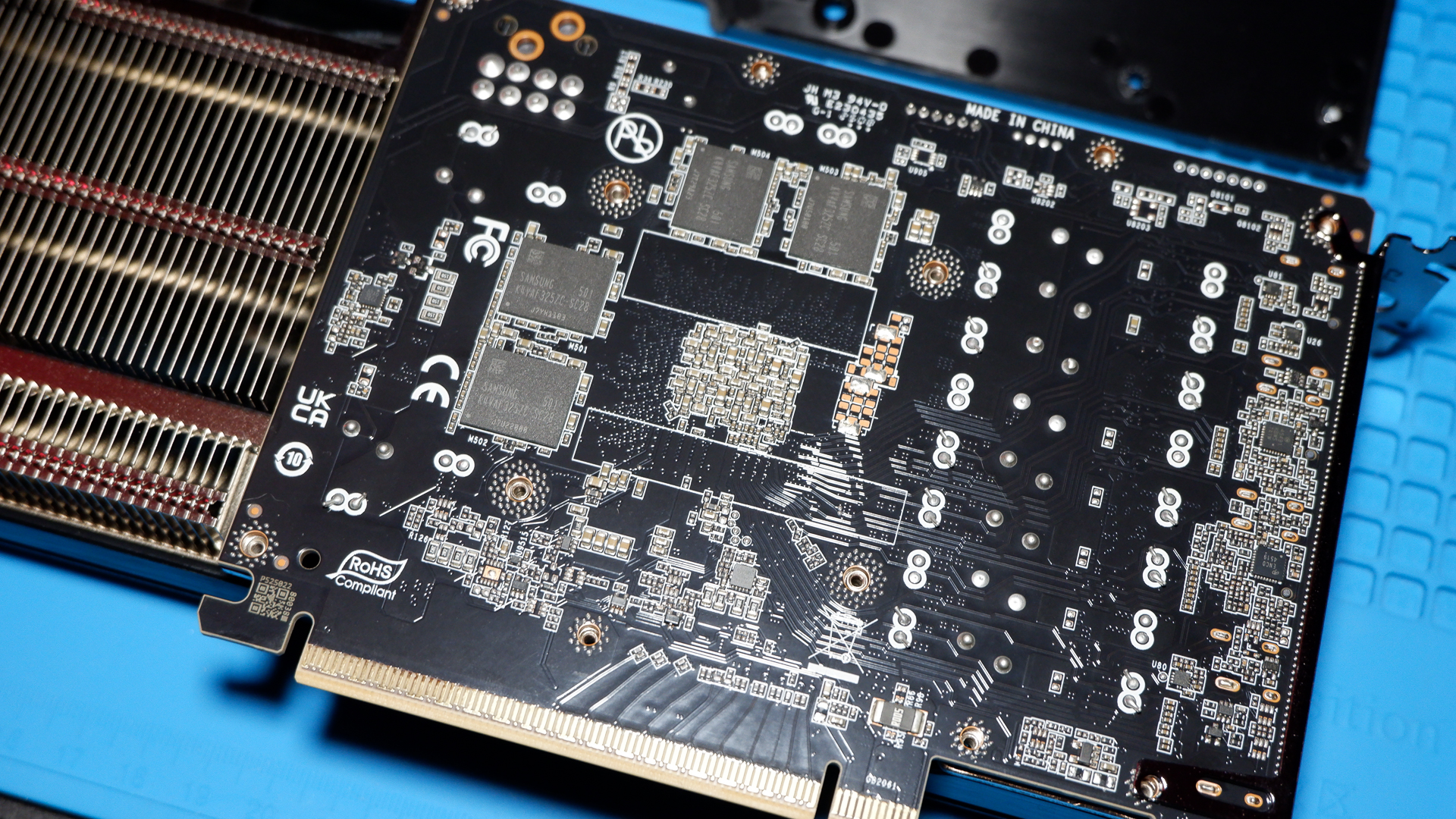

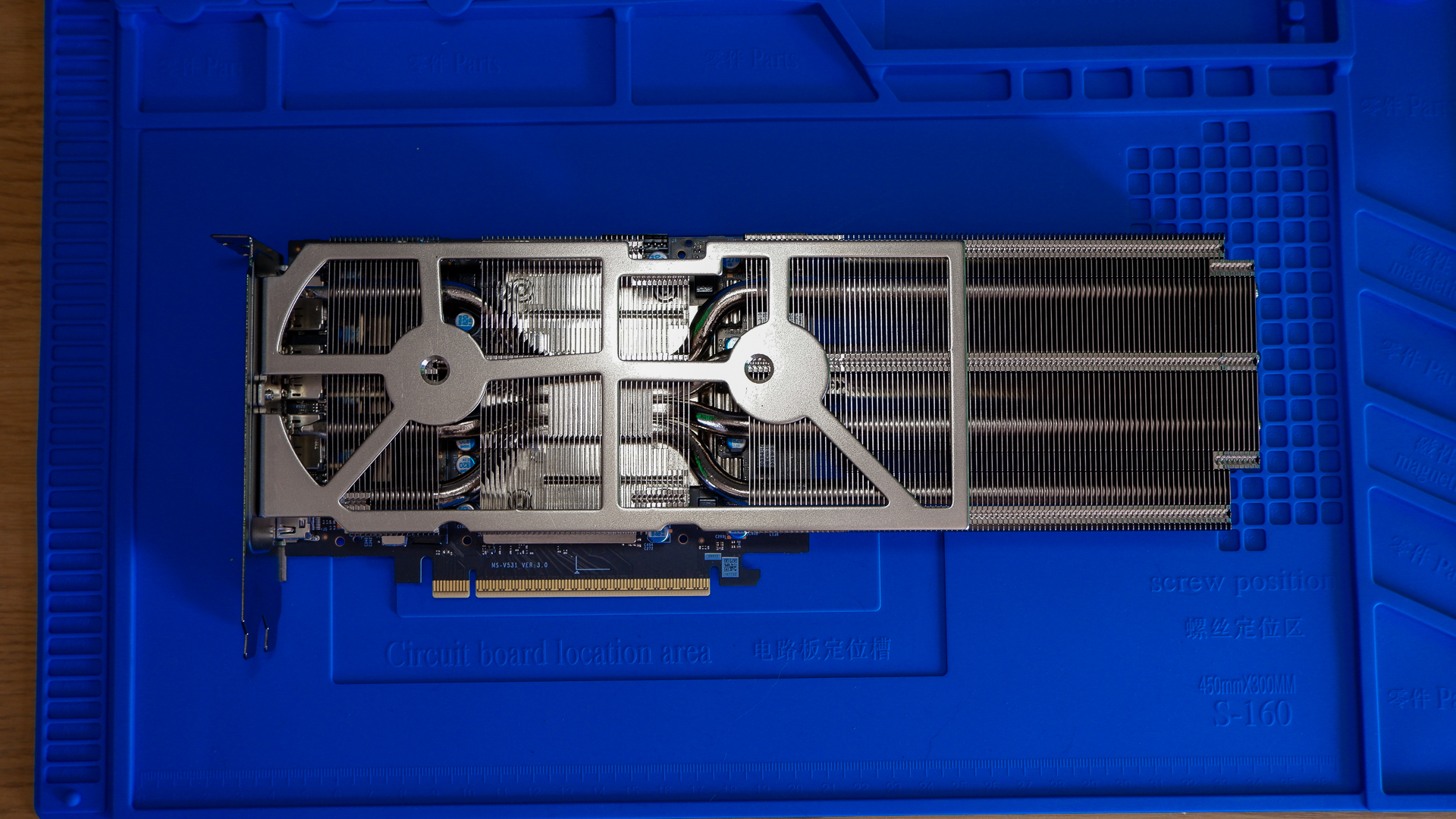

Metal, plastic, who cares? For this graphics card, it actually does matter. The backplate has a few benefits on the Gaming Trio beyond looking good. First off, it offers some structural rigidity to help prevent any sag on the card, but more importantly it offers a way to help cool the four GDDR7 memory chips loaded onto the rear of the PCB.

You only get 16 GB of VRAM on the RTX 5060 Ti by doubling the four RAM chips used on the 8 GB model. That's some simple maths I can get down with. In practical terms, however, that means using what's called 'clamshell' mode to stick another four 16 Gb (2 GB) chips on the opposite side of the PCB to the GPU. These memory chips are not covered by the GPU's heatsink and will run hotter without some other cooling solution in place.
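The clamshell maths is simple enough to spell out. This little sketch just multiplies out the four 16 Gb chips per side of the PCB described above; the function name is illustrative.

```python
# Clamshell VRAM arithmetic for the RTX 5060 Ti (illustrative sketch).
# Assumption: four 16 Gb GDDR7 modules per PCB side, as described in the review.
GBIT_PER_MODULE = 16
MODULES_PER_SIDE = 4


def clamshell_capacity_gb(sides: int) -> int:
    """Capacity in GB for modules on 1 side (normal) or 2 sides (clamshell)."""
    gbits = GBIT_PER_MODULE * MODULES_PER_SIDE * sides
    return gbits // 8  # 8 gigabits per gigabyte


print(clamshell_capacity_gb(1))  # 8 GB model: modules on the GPU side only
print(clamshell_capacity_gb(2))  # 16 GB model: four more modules on the back
```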

On the Gaming Trio, some thermal pads are applied between them and the backplate, which then acts as a surrogate heatsink. Aww. Though you can get away with none, as proven by the Palit RTX 5060 Ti 16 GB Infinity 3 with a plastic backplate, no pads, and a maximum average temperature across all chips during a never-ending loop of 3DMark Steel Nomad only marginally higher than this Gaming Trio (68°C to 62°C).

Still, data be damned, I don't like the idea of leaving these memory chips without any cooling over years of use. So, that's one benefit to the MSI Gaming Trio. Another is its dashing good looks.

This is by far the best-looking of the three cards I've tested. Admittedly, that's only three cards, but the other two I'm not a fan of, and they're the affordable ones. I'm a sucker for translucent plastic on just about anything—I was a see-thru Game Boy Advance kid—and there's some of that effect to the shroud on this card. It has RGB lighting zones on either side of the central fan and around the MSI badge on the tip of the card, which is easy to see through the glass-fronted MSI Pano 100R PZ case it's currently mounted in.

Good-looking, runs cool, well-built… this card has a lot going for it. But then we do have to talk about the price at some point.

In my Palit RTX 5060 Ti 16 GB Infinity 3 review, you'll find this sentence: "You should walk away immediately at anything above $550. That's RTX 5070 money." Now, want to take a guess as to the price of the Gaming Trio?

It's $550.

That's 28% more than the reference MSRP for this card. It's not that unusual for a third-party graphics card to charge the same price as the 'next tier up'—ie an RTX 5060 Ti for the price of an RTX 5070—but it's never an easy pill to swallow. A sign of the times to be charged over $500 for the bottom-rung GPU of the entire RTX 50-series, too.
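Here's where that 28% figure comes from, taking the $429 MSRP Nvidia set for the 16 GB RTX 5060 Ti. The same subtraction gives the $121 premium over the MSRP models.

```python
# Price premium of the MSI Gaming Trio over the RTX 5060 Ti 16 GB MSRP.
msrp = 429         # Nvidia reference MSRP for the 16 GB card, in USD
gaming_trio = 550  # MSI Gaming Trio price, in USD

premium = gaming_trio - msrp
premium_pct = premium / msrp * 100

print(f"${premium} premium, {premium_pct:.0f}% over MSRP")
```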

This card is overclocked out of the factory to the tune of 2647 MHz, which is 75 MHz above the reference clock. That's not a large overclock for this card, a mere 3%, and we'll push it further in just a moment, but here's how it fares out of the box.
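The "mere 3%" can be checked the same way. Working back from the factory clock gives the reference boost clock, assuming the 75 MHz uplift is measured against Nvidia's reference boost.

```python
# Factory overclock on the Gaming Trio relative to the reference boost clock.
factory_boost_mhz = 2647
uplift_mhz = 75
reference_boost_mhz = factory_boost_mhz - uplift_mhz  # implied reference clock

uplift_pct = uplift_mhz / reference_boost_mhz * 100
print(f"{reference_boost_mhz} MHz reference, +{uplift_pct:.1f}%")
```

That works out to just under 3%, so the rounding in the text is fair.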

The RTX 5060 Ti is able to deliver a healthy performance bump over the RTX 4060 Ti 8 GB. In my tests, the Gaming Trio is 20% ahead at 1080p, 23% ahead at 1440p, and 41% ahead at 4K. I should qualify that we're talking pretty slim 4K frame rates most of the time, often sub-30 fps, and you'll need to tap DLSS 4 and Multi Frame Generation to access more playable frame rates—you can, of course, as this card supports all that.

PC Gamer test rig

CPU: AMD Ryzen 7 9800X3D | Motherboard: Gigabyte X870E Aorus Master | RAM: G.Skill 32 GB DDR5-6000 CAS 30 | Cooler: Corsair H170i Elite Capellix | SSD: 2 TB Crucial T700 | PSU: Seasonic Prime TX 1600W | Case: DimasTech Mini V2

Compared to the reference clocked Palit RTX 5060 Ti Infinity 3—the one with a plastic backplate—the extra money doesn't get you any more frames at 1080p or 1440p. They equal out for gains/losses across our benchmarking suite.

The Gaming Trio does run cooler than its counterparts—it's a couple of degrees lower than the Palit at stock clocks and way better than the PNY Dual Fan OC. The Gaming Trio is, as the name suggests, a triple-fan unit, but it's also a little larger than the Palit at 300 x 125 x 44 mm. That is MSI's measurement, inclusive of the PCIe slot; the shroud itself is about 110 mm wide. The other measurements seem bang-on.

The Gaming Trio also maintains its cool composure when overclocked, which is the one place where it really shines.

Many of the RTX 50-series cards I've tested have kept power limits under lock and key. Not the Gaming Trio. You're free to tinker with the power limit, voltage, and, of course, clock speeds to your liking. I managed to push this card with a 410 MHz offset, which is pretty high considering the factory OC of 75 MHz already applied, and altogether pushes the card in excess of 3100 MHz while gaming.

I also pushed the memory a little but ran into some instability, which forced me to dial back a touch. I'm sure with a little more time, someone could cook up a mean overclock on this card and drop back the power demands, which would be the ideal scenario for long-term use.

This untuned overclock netted me a 10% increase in average frame rates at 1080p. 10%. That's excellent, and exactly what I'm after on an expensive third-party card such as this. At 1440p, that shrinks a little to just under 9%. I also managed to push the core clock on the Palit Infinity 3 with a 400 MHz offset, which handed me almost as much of a leap, but it is still slower than the MSI. The Gaming Trio leads it by 2.4% at 1080p and 1.5% at 1440p.

✅ You want to overclock the RTX 5060 Ti: Even with a fairly lazy overclock, you can pull 10% higher frame rates from this graphics card. That's immense.

❌ You could throw more money at your GPU: You can find an RTX 5070 for near to the price of this RTX 5060 Ti, albeit likely not as high-quality a card, but I'd rather the bigger GPU in the long-run.

You don't often see these sorts of gains in either clock speed or resulting frame rates on modern processors. Take 'em while you can. The big companies don't like to leave performance on the table like they used to, which has made us wonder why Nvidia's left so much headroom on the RTX 50-series. Their loss, our gain.

Even with a lick more megahertz, the RTX 5060 Ti is still nowhere close to the RTX 5070 in terms of raw performance. That's an awkward admission to make with the MSI stalking the better card's price tag.

But would you get an RTX 5070, especially one in a card of this quality, for anything close to $550? That's a good question. Right now, as of April 17, 2025, you can buy an Asus Prime RTX 5070 for $600. That should be an MSRP card, and it's unlikely to have all the bells and whistles on this MSI, but it houses a much larger chip and makes better use of its 12 GB of memory for it. In the UK, you can buy an RTX 5070 for £500—or £30 less than MSRP—which is the same price as MSI will ask for this card in dear ol' Albion. That's a very basic model, not that dissimilar to the Palit I've reviewed, but it's darn affordable.

By far my favourite RTX 5060 Ti of the lot so far, the MSI has been built to a much higher standard than its cheaper counterparts. That does count for something, but not quite the $121 premium it's asking over the MSRP models. If you can splurge on this MSI, you can probably also save another $50 for an RTX 5070, or just wait for that card to drop to $550. It will happen, eventually, and I'd much rather the RTX 5070 for the same money in the long-run.

I've found myself especially tempted by the Zotac RTX 5060 Ti Solo, a dinky thing with a single fan. It's just been announced by Zotac, alongside Amp and Twin Edge (OC) designs for RTX 5060-series cards.

Single-fan cards are nothing new, of course—especially not for Zotac—but something about the RTX 5060 Ti Solo tickles my fancy in just the right way.

I'm not sure whether it's the diagonal lining, subtle brown colouring, or just the fact that it's a relatively low-power GPU (compared to the rest of the RTX 50 series) that will presumably not be too hampered by the stubby single-fan design—whatever the reason, something about it is calling out to me in the current GPU market, which is a hellscape of out-of-stock signs in the US and apparently completely fine in the UK.

Nvidia's RTX '60 Ti cards are often good choices for SFF builds because they tend to offer decent entry-level current-gen performance without all the heat production and power guzzling of RTX '70 and '80 cards. In our Jacob R's RTX 5060 Ti review, he found the latest Nvidia GPU to perform about 20% better than the RTX 4060 Ti and only consume a little more power and produce just a little more heat (though much depends on the specific AIB design, of course).

We tested two triple-fan cards for that review, and both largely stuck around the mid-60s Celsius mark. The twin-fan PNY RTX 5060 Ti Dual Fan OC, however, hit the mid-70s at times. This single-fan design? Well, let's just say a 180 W TGP is quite a lot for a compact card to dissipate, but it's not impossible.

We're not given any actual pricing for the RTX 5060 Ti Solo yet, though, so I might have to eat my words. But let's be optimistic, eh?

About this particular card, Zotac says: "For true SFF-PC enthusiasts, the single-fan ZOTAC GAMING GeForce RTX 5060 SOLO remains a top choice for its maximum compactness, and the ability to sit comfortably in 99% of PC cases on the market. Despite being the smallest GPU of ZOTAC GAMING’s 50 Series line-up, the compact SOLO offers the same great performance."

That 99% figure comes from the fact that it's two slots wide, and of course a little horizontally challenged—which is a good thing. Zotac's well into the SFF market, as we've seen with the Zotac ZBox Magnus One SFF gaming PC, and I reckon a single-fan RTX 5060 Ti makes a lot of sense as another step in this direction.

That, or I'm just looking for ways to justify finally upgrading my GPU… don't judge, okay.

Yes, an MSRP-priced RTX 5060 Ti 16 GB is, in fact, in stock in the UK right now. In fact—hold up—two MSRP-priced RTX 5060 Tis are in stock: the Gainward GeForce RTX 5060 Ti Python III for £399.95 at Overclockers and the Palit GeForce RTX 5060 Ti Infinity 3, also for £399.95 at Overclockers.

It should be noted that these are essentially the same card (thus, probably, why these two are the MSRP-priced ones in stock). Palit owns Gainward, and the two cards share the same specs and even look very similar.

The Palit card also happens to be the exact one that our Jacob R tested for his RTX 5060 Ti review. He found it to perform about 20% faster than the RTX 4060 Ti, although it is, of course, a little more power-hungry. And don't forget that it has all the benefits of the 50-series architecture, including the ability to generate fake fra- sorry, I mean, to generate multiple frames for each traditionally rendered one.

The big thing here, though, is simply that these are £399 MSRP cards (okay, technically £0.95 over MSRP at £399.95, if we're being pedantic) that are actually in stock two hours after launch. And that's not me being facetious—I don't think we've seen an MSRP card in stock for so long since before Covid (back in the glory days).

Your guess as to why that might be is as good as mine. The listings do say they're available to "UK and IE only due to high demand", and if the little red Overclockers pop-up is to be believed, a fair few have been sold already. Perhaps Palit/Gainward just hasn't realised how biting the cost of living crisis is over here and therefore how low demand is—who knows?

Whatever the case, they're here, and they seem to be staying here, at least for the moment. How long that moment will last, I'm not sure. I know it's certainly tickling my RTX 3060 Ti-owning 'should I?' bone. Maybe I should hit that buy button… for king and country?

The release notes (PDF) for the new Nvidia driver (version 576.02) list 25 "Fixed General Bugs" and 15 "Fixed Gaming Bugs" (and yes, I did count those line by line). Crucially, these general fixes include ones for various black and blank screen issues.

We've seen fixes for the infamous black screen issues pop up in GeForce driver notes before, but nothing on this scale. I counted 12 (twelve!) general fixes that mention "black" or "blank" screen in them, including one aptly titled "Random Black Screen issues." There are others listed that are probably to do with black/blank screens, too.

Another fix caught my eye, too, this being… ah, yes, 5117518—we all know 5117518, right? This is the issue identifier for a problem with Varjo Aero VR headsets seemingly not working with RTX 5090 graphics cards, which we reported on back in March.

Just a few days later, Nvidia acknowledged the problem as an "open issue." Now, one month later, it seems the problem is solved, alongside about a billion others.

Of course, apart from these extensive apparent bug fixes, the driver also has something positive on offer: chiefly, it's required to get your new RTX 5060 Ti up and running.

Nvidia says, "This driver is required for users adding the new GeForce RTX 5060 Ti to their systems. It also includes support for games adding or launching with DLSS 4 with Multi Frame Generation, including Black Myth: Wukong and No More Room in Hell 2."

In addition to this, there's "support for 19 new G-SYNC Compatible displays" and "6 new Optimal Playable Settings profiles" for Deadlock, GTA V Enhanced, Half-Life 2 RTX, inZOI, Monster Hunter Wilds, and The Last of Us Part II Remastered.

Presumably, we'll now be able to play these games with little risk of a random black screen. Fingers crossed, anyway.

With prices up in the air and what some might call 'macroeconomic headwinds' affecting global moods, I'm not sure how well this 'good deal' statement will age. In fact, I've just had a look around at retailers in the hours before publishing this review, and the 8 GB card costs as much as the 16 GB card, which itself is going for just under $500. That's not good.

Yet in my pre-release isolation, I've been reasonably impressed with the RTX 5060 Ti 16 GB.

It offers a decent upgrade on the RTX 4060 Ti through a relatively small improvement to its silicon, spurred on by much speedier memory. It has little 'wow' factor, slipping in right where you'd expect it to in the existing stack, but at a more competitive price than last-gen models. It's faster than an RTX 4060 Ti, but not so close to the RTX 5070 that a judicious overclock will cannibalise that card, though it does take to overclocking with aplomb.

I'll be focusing on the 16 GB version of the RTX 5060 Ti for this review. Specifically, the Palit RTX 5060 Ti Infinity 3 16 GB, which I'm told will be available at MSRP in the UK. We've not been provided with an 8 GB model for launch, though I have included figures for another two 16 GB cards: the PNY RTX 5060 Ti OC, which will be available at MSRP in the US; and the MSI RTX 5060 Ti Gaming Trio OC Edition.

RTX 5060 Ti 16 GB verdict

✅ You want an efficient graphics card for 1080p or 1440p: A 20% performance uplift over the previous generation for just 20 W more. That's impressive scaling.

✅ You are on an older graphics card, perhaps a GTX: You can probably live without the extra 20% and Multi Frame Generation if you're already rocking an RTX 40-series graphics card. However, if you're on an older card, the RTX 5060 Ti offers a great upgrade path, provided it's something close to MSRP.

❌ You want more VRAM and the means to use it: The 16 GB version of this card is more appealing for all that VRAM, but this GPU isn't really equipped to put it to great use at higher resolutions.

❌ Prices end up way over MSRP: Perhaps I don't need to say it, but if you're spending over $500 on this card, you're no longer getting a good deal. Over $550 and you're paying RTX 5070 money…

The RTX 5060 Ti 16 GB is a good entry-level graphics card and a worthy upgrade for some PC gamers.

It benefits from a power-efficient architecture, offers a real-terms performance uplift over the previous generation, and wields a higher VRAM configuration that, at MSRP, is without a controversial price premium. It's a good proposition, even if you prefer to wave away nascent AI-powered features like Multi Frame Generation.

It's a particularly good proposition if you're looking to upgrade from an older graphics card, especially one beginning with 'GTX'. Nvidia does recommend a 600 W power supply with this card, which might nix an easy upgrade for some, but even Intel's Arc B580 requires a 600 W PSU, so you might just have to suck it up and buy a new one if you intend to upgrade.

The RTX 5060 Ti naturally excels at 1080p, though 1440p is easily within its reach. If you're playing a game with support for Multi Frame Generation, you can dual-wield it and DLSS for genuinely impressive frame rates even at the higher resolution. Just be cautious of overstretching the card, both with and without MFG, as its specs are only slightly improved over its predecessor, the RTX 4060 Ti. Yet those specs and its 16 GB of speedy GDDR7 are enough to maintain around a 20% lead at 1080p and 1440p in our testing.

The card is available in two memory configurations, 16 GB and 8 GB, and the latter feels like a small amount of memory in 2025, especially as there have been many cards over the years with more memory for a similar price, including the cheaper Arc B580. But I've yet to see much evidence that the larger VRAM buffer will be a huge boon on what is ostensibly a small GPU compared to others in the RTX 50-series, and one intended for sub-4K resolutions. 16 GB is nice, but it's nicer when the GPU and memory bus can make the most of it.

There are big question marks over price and availability with this card, due to prior and ongoing issues affecting the existing RTX 50-series. And then there's AMD's teased but not yet officially announced RX 9060-series, which should arrive within a couple of months. If all that sounds like reasons to hold off purchasing a new graphics card until later in the year, you might be saving yourself some hassle. But if you can't wait any longer and your graphics card is on its last legs, the RTX 5060 Ti at, or near, MSRP is a smart buy.

RTX 5060 Ti 16 GB specs

Let's start with the top line, the all-important specifications. The RTX 5060 Ti is a pretty good deal on paper. It features more cores, more RT chops, higher clocks, and more AI TOPS than its predecessor, the RTX 4060 Ti, and for nominally less cash.

There's slightly less to get excited about when you dive into the GPU silicon, though. An increase in Streaming Multiprocessors (SMs) of just 5.88% cascades through the core counts, RT cores, and Tensor cores. That's hardly a big number to wave in your friends' faces once you get hold of this card. However, the move to much faster GDDR7 memory with the RTX 50-series offers a much more bragworthy figure: a 55.56% increase in memory bandwidth to 448 GB/s, thanks to an increase in memory speed from 18 Gbps to 28 Gbps.
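For anyone who wants to check those percentages, here's a quick back-of-envelope sketch. It assumes the SM counts from Nvidia's published spec sheets (34 for the RTX 4060 Ti, 36 for the RTX 5060 Ti), which aren't quoted in this review, and uses the standard peak-bandwidth formula: bus width in bits multiplied by the per-pin data rate in Gbps, divided by eight to convert bits to bytes.

```python
# Back-of-envelope check of the RTX 4060 Ti -> RTX 5060 Ti spec deltas.
# Assumption: SM counts of 34 (RTX 4060 Ti) and 36 (RTX 5060 Ti), taken from
# Nvidia's published specs rather than from this review.


def pct_increase(old: float, new: float) -> float:
    """Percentage increase going from `old` to `new`."""
    return (new - old) / old * 100


def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width x per-pin rate, / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


sm_gain = pct_increase(34, 36)
bw_4060ti = bandwidth_gbs(128, 18)  # 128-bit bus, GDDR6 at 18 Gbps
bw_5060ti = bandwidth_gbs(128, 28)  # 128-bit bus, GDDR7 at 28 Gbps
bw_gain = pct_increase(bw_4060ti, bw_5060ti)

print(f"SM increase: {sm_gain:.2f}%")
# SM increase: 5.88%
print(f"Bandwidth: {bw_4060ti:.0f} -> {bw_5060ti:.0f} GB/s (+{bw_gain:.2f}%)")
# Bandwidth: 288 -> 448 GB/s (+55.56%)
```

Both cards use the same 128-bit bus, so the entire bandwidth gain in this calculation comes from the jump in per-pin data rate.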

That memory speed and bandwidth improvement is even more pronounced compared to last generation at the lower end of the RTX 50-series, as the cheaper RTX 40-series cards used slower GDDR6 memory instead of the GDDR6X chips found on enthusiast models.

That's not the only reason to focus on memory with the RTX 5060 Ti: it's available in 16 GB or 8 GB variants.

All of my testing in this review is for the 16 GB variant, with three models of the 16 GB card being the first to arrive on my desk. This card's predecessor, the RTX 4060 Ti, also came in both 8 GB and 16 GB variants, but the larger capacity model arrived later and to little fanfare.

To help put the inevitable memory debate into perspective, the RTX 4060 Ti 16 GB was a tough sell at launch. Launching later than its 8 GB variant for $100 more, it only really benefited a few niche cases, and often not by much. It felt like a cynical launch to us: a reaction to the discontent brewing over a near-$400 card with 8 GB of memory and a 128-bit memory bus. Even Nvidia seemed reluctant to talk much about the card, and that's why we never saw one for review. As such, all of my results for the RTX 4060 Ti are for the 8 GB variant.

Has much changed with the RTX 5060 Ti 16 GB?

Exactly like its predecessor, the RTX 5060 Ti opts for the same, rather paltry, 128-bit memory bus across both variants. For the 16 GB model, it uses a 'clamshell' memory configuration. Essentially, this means that memory has been attached to both sides of the PCB (the memory acting as the bread of the sandwich, so to speak), which calls for cooling pads affixed to the graphics card's backplate. Increasing the memory capacity in this way doesn't increase throughput, as memory bus width and speed dictate that, but there's less chance of a hoggish game running out of room and hampering performance.
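To illustrate why clamshell adds capacity without adding bandwidth, here's a simplified model. It assumes 2 GB GDDR7 modules, 32-bit memory channels, and two modules sharing each channel in clamshell mode; those module details are illustrative assumptions, not figures supplied by Nvidia or the board partners.

```python
# Simplified model: clamshell doubles capacity on a 128-bit bus but not bandwidth.
# Assumptions (illustrative): 2 GB GDDR7 modules, 32-bit channels, and two
# modules sharing each channel in clamshell mode.

BUS_WIDTH_BITS = 128
CHANNEL_WIDTH_BITS = 32
DATA_RATE_GBPS = 28
MODULE_CAPACITY_GB = 2


def memory_config(clamshell: bool) -> tuple[int, float]:
    """Return (capacity in GB, peak bandwidth in GB/s) for the given layout."""
    channels = BUS_WIDTH_BITS // CHANNEL_WIDTH_BITS  # 4 channels
    modules_per_channel = 2 if clamshell else 1      # front + back of the PCB
    capacity_gb = channels * modules_per_channel * MODULE_CAPACITY_GB
    # Bandwidth depends only on total bus width and per-pin data rate; extra
    # modules behind the same pins add room, not throughput.
    bandwidth_gbs = BUS_WIDTH_BITS * DATA_RATE_GBPS / 8
    return capacity_gb, bandwidth_gbs


for layout, clamshell in (("single-sided", False), ("clamshell", True)):
    capacity, bandwidth = memory_config(clamshell)
    print(f"{layout}: {capacity} GB at {bandwidth:.0f} GB/s")
# single-sided: 8 GB at 448 GB/s
# clamshell: 16 GB at 448 GB/s
```

The point of the model is the last line of `memory_config`: capacity scales with the number of modules, while bandwidth never looks at the module count at all.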

Yet, unlike the RTX 4060 Ti 16 GB, which cost $100 more than the 8 GB version, the RTX 5060 Ti 16 GB costs just $50 more. That puts it much more in the mix, provided real-world prices actually reflect this.

Memory matters most when gaming at higher resolutions, ie 4K, but we're mostly talking about 1080p/1440p performance with the RTX 5060 Ti. That bears out in my game testing in the performance section below, where the RTX 5060 Ti 16 GB is able to extend its lead over the RTX 4060 Ti 8 GB to a much greater extent at 4K compared to 1440p and 1080p.

But I want to be clear about my thoughts on future-proofing and VRAM: opting for the 16 GB variant is not a panacea for performance in four, five, or six years' time. It might help with the odd case of a game running like pants due to poor optimisation, though these issues can be, and often are, fixed with a little dev work. Ultimately, the fundamentals remain the same either way: the GB206 GPU and 128-bit bus will end up being the limiting factors for future performance.

The RT cores, Tensor cores, and CUDA cores are still likely to wane in efficacy over time, as new games make better use of more modern features. You're still better off saving up for the xx70 card or a Radeon option if memory performance and longevity matter most to you.

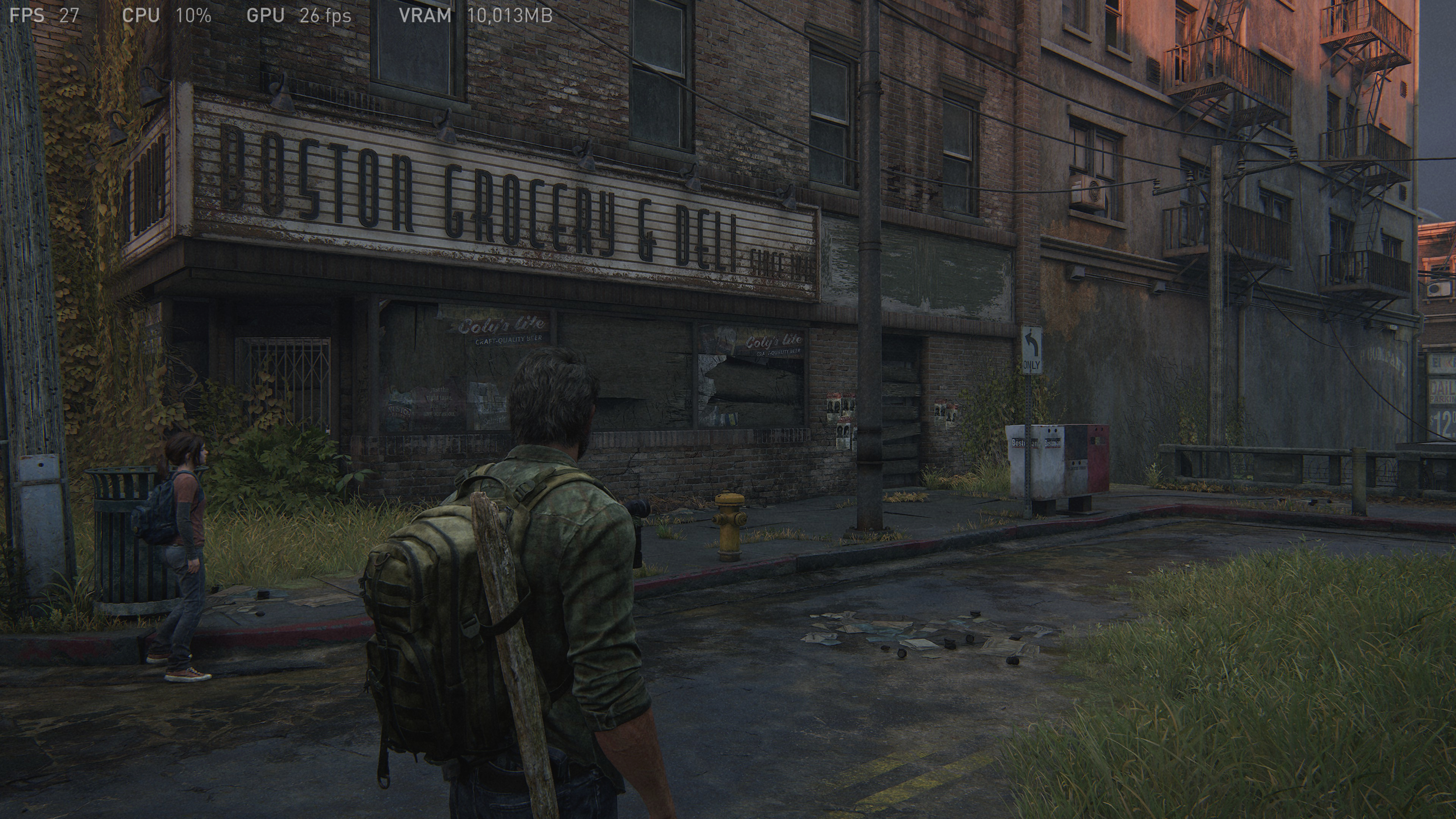

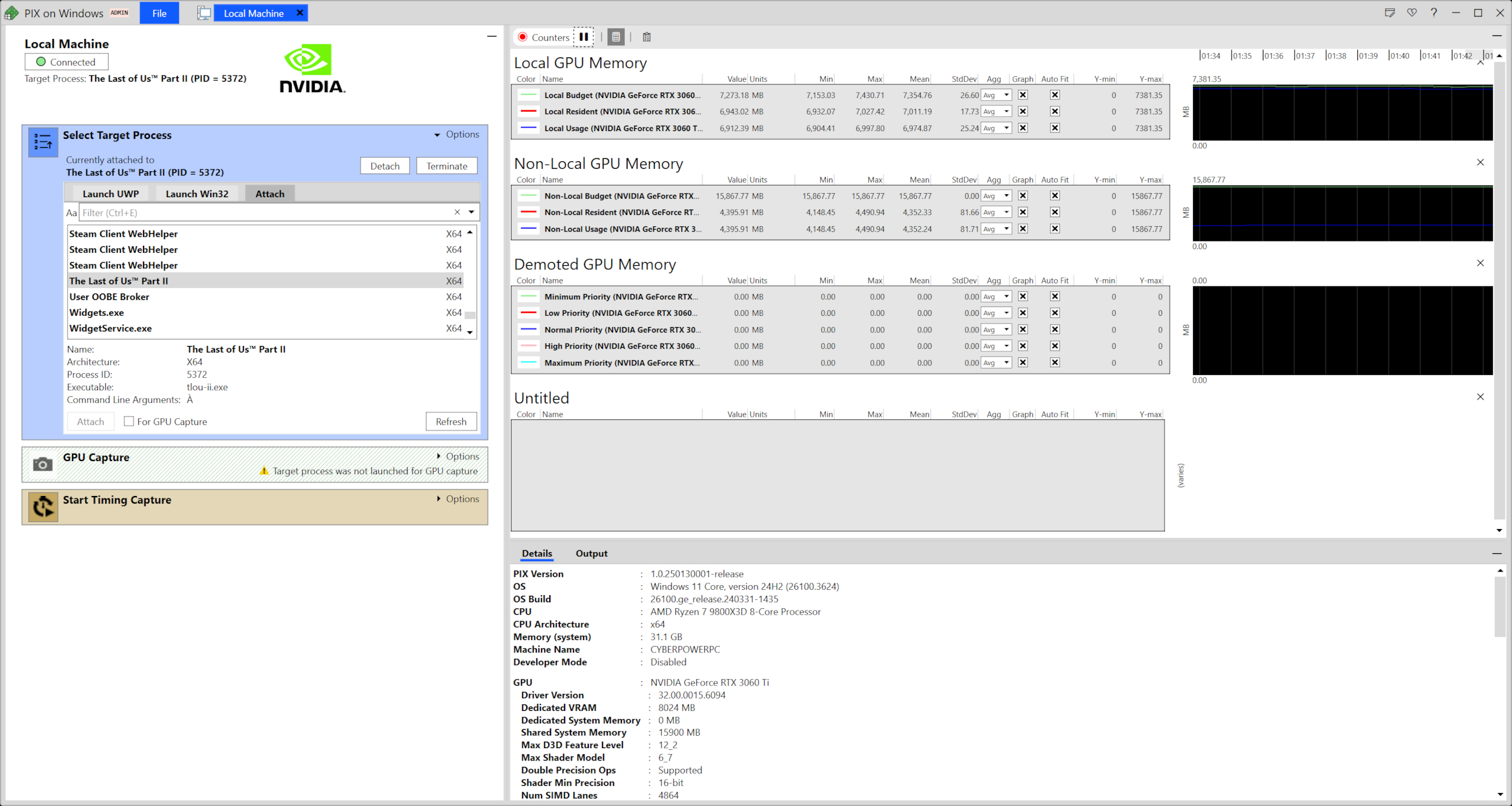

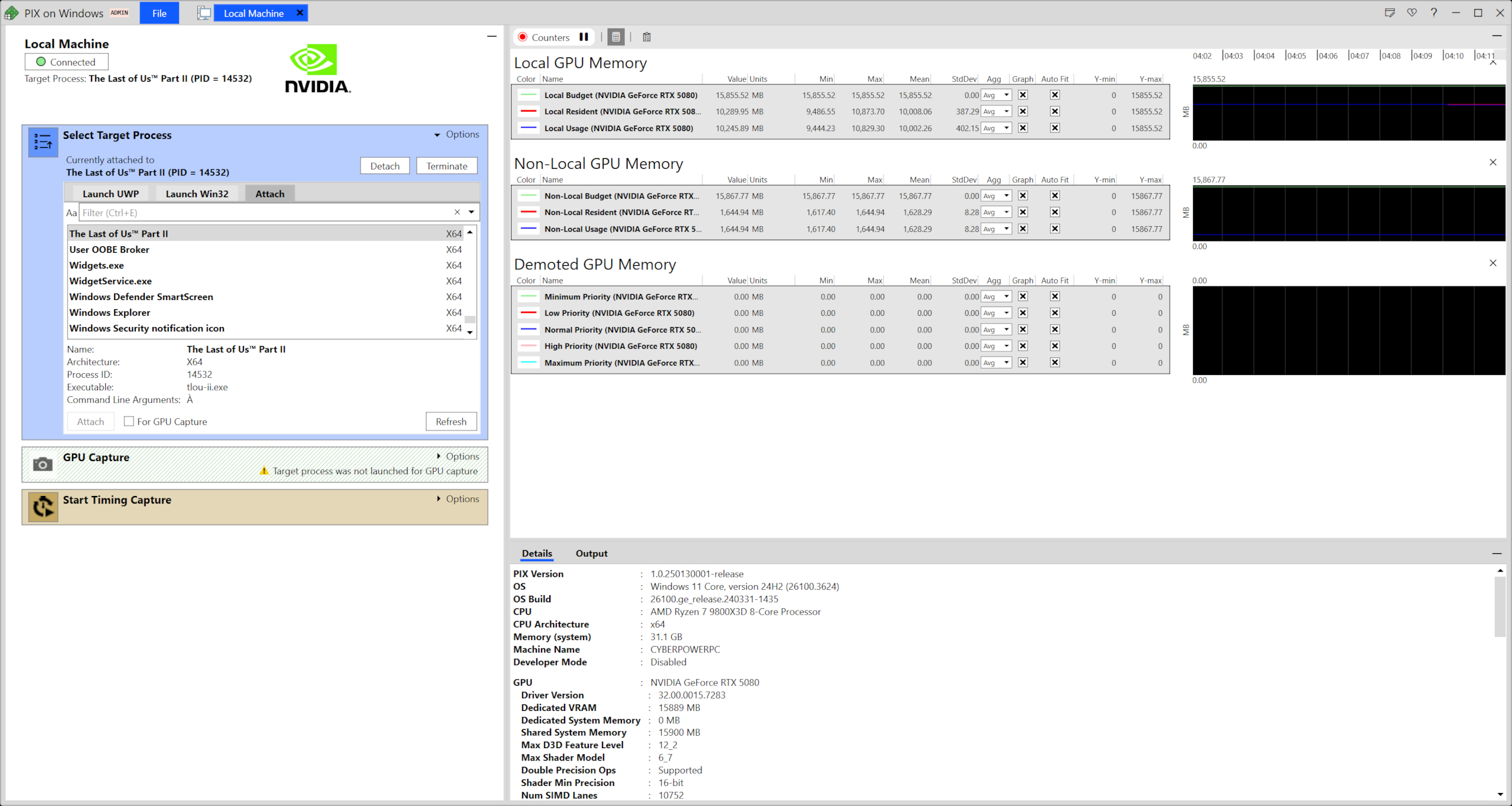

And there have been some edge cases of high VRAM usage, such as The Last of Us Part 1, but we've since seen memory usage improvements with its sequel. The Last of Us Part 2 uses a smart asset management system that improves VRAM usage and doesn't trip over its own shoelaces when presented with an 8 GB card. That's not to say all games will follow in its footsteps, but it's a promising sign.

The ideal solution would be for Nvidia to adopt 12 GB as a standard on its low-end cards and ditch 8 GB altogether, while matching existing prices, rather than offering this 16 GB stop-gap on a GPU not designed to make the most of it. Nvidia argues that 8 GB helps keep costs low globally, in markets beyond Europe and the US, which is fair, and I don't have the bill of materials in front of me to belabour the point, but perhaps bringing back a desktop xx50 card would help solve that one?

So, would I buy the 16 GB card if presented with it for $50 more than the 8 GB option? Sure. Therein lies the duality of PC gamers and our allergy to reason when building PCs. Altogether, we can chalk up the 16 GB card as a 'nice to have', but I wouldn't pay over the odds for it.

Moving on, the RTX 5060 Ti clocks higher than most RTX 50-series cards. That's to be expected for a smaller die, and the GB206 is the smallest of the lot at 181 mm². With a reference boost clock of 2572 MHz, it is only slightly behind the RTX 5080 FE at 2620 MHz, but its base clock is the highest yet at 2400 MHz.

That's for a 180 W TGP (total graphics power)—20 W higher than the RTX 4060 Ti and 70 W lower than the RTX 5070.

What's pretty impressive is how each one of those extra watts over the RTX 4060 Ti converts neatly into a single percentage point gained in our testing at 1080p and 1440p—the RTX 5090 at nearly 600 W could only dream of that sort of power scaling.
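As a rough sanity check on that power scaling, the sketch below compares performance per watt using rated TGP figures (160 W for the RTX 4060 Ti, implied by the 20 W gap mentioned above, and 180 W for the RTX 5060 Ti) together with the 20% and 23% uplifts from our 1080p and 1440p testing. TGP is a rated figure, not measured power draw, so treat the output as an approximation.

```python
# Rough performance-per-watt comparison, RTX 4060 Ti vs RTX 5060 Ti.
# Assumption: rated TGP stands in for actual power draw.

TGP_4060TI_W = 160
TGP_5060TI_W = 180


def perf_per_watt_gain(uplift_pct: float, old_w: float, new_w: float) -> float:
    """Percentage change in performance per watt for a given performance uplift."""
    perf_ratio = 1 + uplift_pct / 100
    power_ratio = new_w / old_w
    return (perf_ratio / power_ratio - 1) * 100


extra_watts = TGP_5060TI_W - TGP_4060TI_W                # 20 W
power_increase = (TGP_5060TI_W / TGP_4060TI_W - 1) * 100  # 12.5%

for resolution, uplift in (("1080p", 20), ("1440p", 23)):
    gain = perf_per_watt_gain(uplift, TGP_4060TI_W, TGP_5060TI_W)
    points_per_watt = uplift / extra_watts
    print(f"{resolution}: +{uplift}% perf for +{power_increase:.1f}% power, "
          f"{points_per_watt:.2f} points per extra watt, perf/W +{gain:.1f}%")
# 1080p: +20% perf for +12.5% power, 1.00 points per extra watt, perf/W +6.7%
# 1440p: +23% perf for +12.5% power, 1.15 points per extra watt, perf/W +9.3%
```

Put another way, a 20% uplift for 12.5% more rated power works out to roughly 7% better performance per watt at 1080p.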

There is no Founders Edition for the RTX 5060 Ti in either the 16 GB or 8 GB variant. That's a shame, as we've come to appreciate the Founders Edition model for its low temps and noise with other 50-series GPUs, but most of all for its price. MSRP cards are not often found in today's world, and the lack of a Founders Edition from Nvidia only serves to remove another opportunity to buy an RTX 5060 Ti at the asking price.

Palit RTX 5060 Ti Infinity 3 specs

With no Founders Edition, I'll be focusing on the Palit RTX 5060 Ti Infinity 3 for this review. I've been assured this will launch at MSRP in the UK. That's £399 for the 16 GB model, or £349 for 8 GB. Whether this specific card will be widely available in the US, or close to MSRP, is still a mystery, however.

We've also tested the PNY RTX 5060 Ti Dual Fan OC, which we've been promised is an MSRP model in the US, and the MSI RTX 5060 Ti Gaming Trio OC Edition, which is going to be priced higher. So, all bases are covered in the performance section below.

What you get with the Palit Infinity 3 is a triple-fan shroud with a slim heatsink, measuring 290 x 102.8 x 38.75 mm. It's nowhere near as thick as the MSI RTX 5080 Ventus 3X OC I've close to hand, which I thought to be a fairly slim GPU, but the Infinity 3 is only aiming to dissipate a reasonable 180 W and sticks to reference clock speeds.

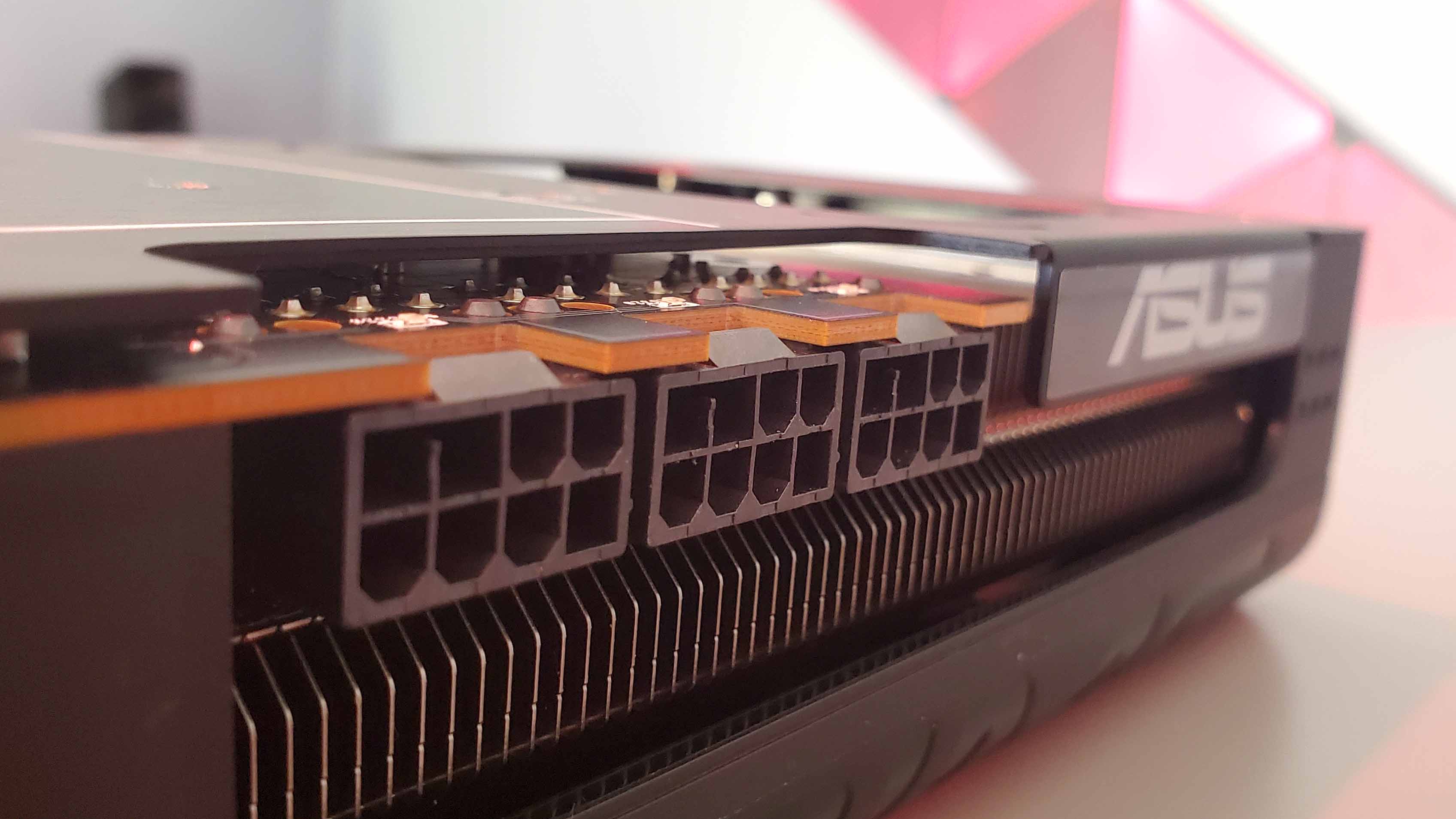

I was slightly surprised to see a single 8-pin power connector on this card, as opposed to the 12V-2x6 connector on the MSI card. That said, the PNY showed up shortly after the other two and also uses a single 8-pin power connector, so that might be a bit of a theme.

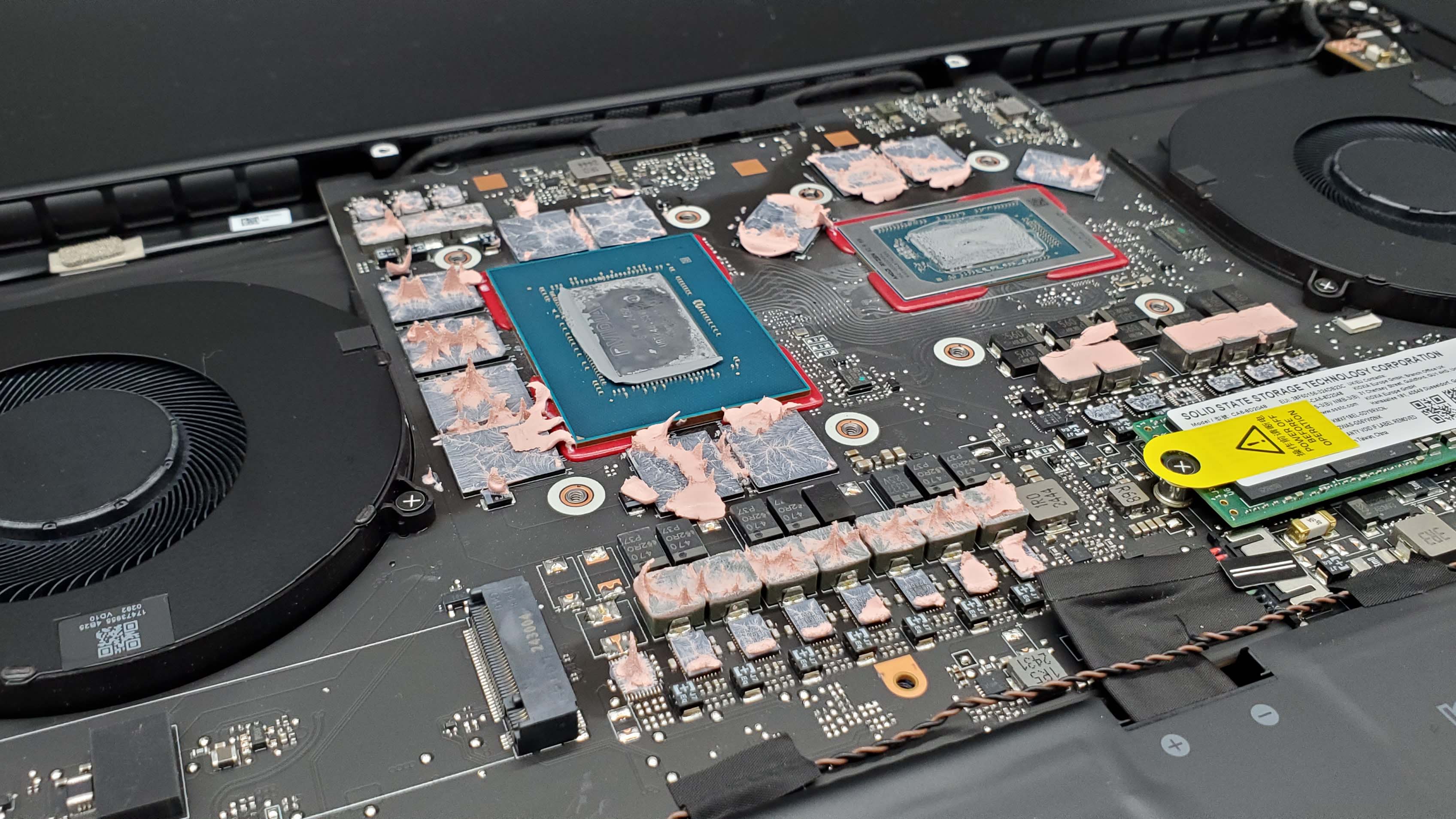

Opening up the rear of the Palit Infinity 3 to gaze upon its clamshelled memory, I was surprised to find there are no extra thermal pads to cover these rearward chips. In fact, the entire backplate is made of plastic, so pads wouldn't be a wise idea anyway. That makes for a stark contrast with the MSI Gaming Trio OC and PNY OC, which both feature a metal backplate and a couple of thermal pads for those rear memory chips.

Due to the lack of thermal pads, I've not pushed a memory overclock on this card, as I usually would with RTX 50-series cards. I kept an eye on memory junction temperatures while benchmarking Metro Exodus, and they stayed below 70°C. This suggests there's no major issue at stock speeds, though these are average temperatures across all memory chips, which might hide the worst fluctuations on those specific rear-facing chips.

RTX 5060 Ti 16 GB performance and benchmarks

A 20% improvement versus the last generation: that's what Nvidia was touting for this card before we got our hands on it, and it's a fair assessment. My own testing bears that out, with the RTX 5060 Ti 16 GB coming in 20% faster than the RTX 4060 Ti 8 GB at 1080p, and 23% faster at 1440p. That's raw raster performance, too, without upscaling or frame generation.